Ron Huddleston, corporate vice president of the Microsoft One Commercial Partner (OCP) worldwide organization, is going on an indefinite family leave a little less than a year into the job, and Gavriella Schuster will step into the role.

Schuster previously reported to Huddleston as corporate vice president for worldwide channels and programs, and was also considered Microsoft's "worldwide channel chief," a semi-official designation that she had also held prior to Huddleston's arrival in OCP when she was CVP of the now-discontinued Worldwide Partner Group (WPG).

Huddleston, a former senior executive at Oracle and more recently at Salesforce.com where he helped set up the AppExchange business app marketplace, got the nod to lead the newly formed OCP in early 2017. He moved over to the partner role from an initial posting in the Microsoft Dynamics organization in the summer of 2016.

The OCP worldwide organization reports to Judson Althoff, executive vice president for the Microsoft Worldwide Commercial Business. Forming OCP consolidated channel efforts that were previously spread throughout different divisions and groups inside Microsoft. A particular focus was bringing developer enablement into the main channel organization.

This leadership change late in calendar 2017 caps a tumultuous year for Microsoft field and channel employees, as well as the Microsoft partners who interact with them. The creation of the OCP early in the year served as a precursor for a massive reorganization throughout the Microsoft field, with layoffs and reassignments occurring through the summer and the fall.

It remains to be seen what the change will mean for some of the initiatives that Huddleston championed, especially the Solution Maps, also known as OCP Catalogs, which were lists of go-to partners in different geographies and vertical industries for various elements of a solution. Much of the field reorganization involved the staffing of the new Channel Manager roles, which had responsibility for maintaining the maps.

The other major OCP initiative since its creation was to reorganize all Microsoft partner employees and efforts around three motions -- build-with, sell-with and go-to-market.

Posted by Scott Bekker on December 11, 2017

A year after unveiling a Microsoft-Qualcomm partnership to bring Windows 10 to the Snapdragon platform and create a new class of Windows on ARM devices, ASUS and HP are showing off systems with shipments set to begin next year.

The ASUS NovaGo, a 2-in-1 convertible, will be available in early 2018. The HP Envy x2 Windows on Snapdragon Mobile PC is supposed to ship in the spring. Both devices were on display for hands-on use at Qualcomm's Snapdragon Technology Summit in Hawaii this week.

Here are six big takeaways from the Windows on Snapdragon developments:

1. This is a category-creation effort.

This is an important new platform for Windows 10 and a potentially significant new class of devices for business and home use. For a while, the market has been mostly segmented into PCs, tablets and smartphones. The Snapdragon-based devices could create some crossover possibilities in the in-between spaces -- less expensive, bulky or battery-draining than even the smallest and lightest 2-in-1 PCs but more capable than tablets or larger smartphones.

2. It's not Microsoft's first ARM rodeo.

Microsoft did try to do Windows on ARM before with the Surface RT, and it was not a hit. Surface RT eventually went away and Microsoft took some bruising earnings writeoffs in the process. The major limitation of the RT platform was its inability to run regular Windows applications, having only supported modern apps. Those apps had usability problems, suffered from developer disinterest, and buyers were confused about the incompatibilities.

The new ASUS NovaGo.

This time Microsoft is coming at the ARM category in a more traditional way for Redmond -- working closely with the platform provider and enabling OEM partners. The new devices will come pre-installed with Windows 10 S, which has some app compatibility limitations of its own, although they are not as severe as the ones RT presented. Windows 10 Pro is supposed to be an upgrade option, as well.

3. The platform provides some interesting capabilities.

Undergirding the Windows 10 on Snapdragon systems is the Qualcomm Snapdragon 835 Mobile PC platform, as well as Qualcomm LTE modems. Microsoft bills the systems as Always Connected PCs. Terry Myerson, executive vice president of the Windows and Devices group at Microsoft, presented the platform pitch this way: "Always Connected PCs are instantly on, always connected with a week of battery life...these Always PCs have huge benefits for organizations, enabling a new culture of work, better security and lower costs for IT."

In the case of the NovaGo, ASUS is claiming 22 hours of battery life and 30 days of standby. Adding in capabilities from the Snapdragon X16 LTE modem, as well as on-board Wi-Fi and other technologies, ASUS says the system is capable of LTE download speeds of up to 1Gbps and Wi-Fi download speeds of up to 867Mbps. In other words, peak LTE downloads are faster than Wi-Fi downloads. ASUS claims an LTE download of a two-hour movie takes around 10 seconds.
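The 10-second claim can be sanity-checked with simple arithmetic. The sketch below assumes decimal units (1GB = 8,000 megabits) and, optimistically, that the 1Gbps peak LTE rate holds for the entire download; real cellular throughput usually falls well short of peak. Under those assumptions, 10 seconds moves about 1.25GB, so the claim implies a movie file of roughly that size, which is an inference, not an ASUS figure.

```python
# Back-of-envelope check of the "two-hour movie in about 10 seconds" claim.
# Assumption: the advertised peak rate is sustained for the whole transfer.


def download_seconds(size_gb: float, link_mbps: float) -> float:
    """Seconds to move size_gb decimal gigabytes over a link_mbps link."""
    size_megabits = size_gb * 1000 * 8  # GB -> MB -> megabits
    return size_megabits / link_mbps


def max_size_gb(seconds: float, link_mbps: float) -> float:
    """Largest file (decimal GB) that fits through the link in `seconds`."""
    return seconds * link_mbps / 8 / 1000


print(max_size_gb(10, 1000))        # 1.25 (GB in 10 s at the 1Gbps LTE peak)
print(max_size_gb(10, 867))         # about 1.08 GB at the 867Mbps Wi-Fi peak
print(download_seconds(1.25, 867))  # about 11.5 s for the same file over Wi-Fi
```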

4. Designs aren't breaking new ground yet.

Designs shown so far are variations on existing PCs from ASUS and HP. While there is the potential for some rethinking of the PC based on the technology platform, the first few models are pretty familiar.

5. Performance is worth watching.

Performance will be an issue, which raises questions about where these systems will find their niche. Early reviews indicate the PCs will be plenty fast enough for Web browsing and e-mail. But the overhead of emulating traditional x86 Windows applications, along with the repurposing of mobile chipsets for PC use, means the systems won't be as capable as run-of-the-mill PCs at offline processing.

6. Price is a big question.

The other issue determining who ultimately buys these Windows 10 on Snapdragon devices is price. ASUS is charging $599 for a model with 4GB of RAM and 64GB of storage and $799 for one with 8GB of RAM and 256GB of storage. That's fairly steep for devices competing with Chromebooks and even mid-range Windows PCs.

On the other hand, LTE connectivity is a wild card in valuing the system. HP and Lenovo (which is also working on a device) haven't released their pricing yet. Where those device prices land will indicate where the Windows 10 on Snapdragon category might find its niche.

Posted by Scott Bekker on December 06, 2017

During an Ask Me Anything (AMA) session this week aimed at new partners, Microsoft gave the hard sell for its Cloud Enablement Desk benefit.

Microsoft hosted the AMA session, called "Partnering with Microsoft," on its Microsoft Partner Community page on Wednesday after taking questions for eight days. Fielding questions for the session was John Mighell, Global Breadth Partner Enablement Lead at Microsoft, who is also responsible for the MPC Partnership 101 discussion board.

As Microsoft emphasizes cloud service sales and consumption in employee compensation and in investor relations, the company is tweaking Microsoft Partner Network (MPN) programs, competencies and incentives to encourage partners to drive cloud sales. The Cloud Enablement Desk is a relatively new and little-known part of that broader effort.

First discussed around the beginning of this calendar year, the Cloud Enablement Desk is a team of Microsoft employees who are standing by to help both brand-new partners -- even those who signed up for free in the Microsoft Partner Network (MPN) -- as well as longtime partners get going with Microsoft's cloud.

"This is a new and fantastic resource team specifically for supporting non-managed partners as they navigate MPN and build a practice," Mighell said in the AMA. Managed partners are those few among Microsoft's hundreds of thousands of channel partners who have Microsoft field salespeople dedicated to their performance.

Mighell indicated that the desk is a work in progress with continuous investment: "As this is a new process, we are adding additional services and expertise to this team constantly."

Some of the services currently offered through the desk are for MPN's free, lowest tier -- Network Member. Those partners who nominate themselves for cloud desk help are supposed to be contacted by a Cloud Program Specialist within 48 hours with assistance for getting started in the MPN and for attaining a cloud competency. Microsoft's cloud competencies include Cloud Customer Relationship Management, Cloud Productivity, Cloud Platform, Enterprise Mobility and Management, and Small and Midmarket Cloud Solutions.

Partners who pay for a Microsoft Action Pack Subscription or who work through the requirements of, and pay for, one of the competencies are eligible for a little more hands-on help from the desk, including a Cloud Mentor Program. The Action Pack costs $475 per year, while the silver cloud competency fee in the United States is $1,670.

In response to a different question in the AMA -- the evergreen matter for low-profile partners of how to get 1:1 attention from Microsoft's field -- Mighell implied that the cloud desk may be something of a shortcut. "Getting involved with the Cloud Enablement Desk (see my previous reply) is one way to get connected in with local sales and enablement efforts," he said.

Posted by Scott Bekker on November 30, 2017

Global technology companies like Microsoft and SAP SE, of course, often double as marquee clients for one another.

Taking advantage of their scale, prominence and ongoing strategic partnership, SAP and Microsoft this week announced a new dogfooding arrangement. Each company will run parts of its actual business on the product of their joint engineering work: SAP HANA Enterprise Cloud running on Microsoft Azure.

The partnership to get SAP's enterprise ERP products onto Azure has been publicly discussed for over a year, and fits within a long history of cooperation in pursuit of joint business opportunities in spite of other areas where the companies directly compete. When the joint work on Azure was initially announced, for example, Microsoft and SAP also outlined an arrangement to more tightly integrate SAP offerings with Microsoft Office 365, upsetting some Microsoft partners for whom Office 365 compatibility with Microsoft Dynamics ERP products was a competitive differentiator.

Nor is SAP exclusive to Microsoft in this instance. The company has worked with Amazon Web Services (AWS) since 2011 to get its enterprise cloud products onto that public cloud and is also engaged with the Google Cloud Platform (GCP).

What's qualitatively different about the new Microsoft-SAP arrangement is the public description of the real internal systems where each company will deploy the joint solution.

Microsoft described itself as transforming its internal systems to implement the SAP S/4HANA Finance solution on Azure. The company said those internal systems include legacy SAP finance applications. The use of the word "include" implies other legacy systems are being swept up in the transformation, but those systems aren't disclosed. The plan for Redmond also calls for connecting SAP S/4HANA to Azure AI and analytics services.

SAP is committing to migrate more than a dozen business-critical systems to Azure. While it doesn't look like SAP is putting anything as central as its internal financial applications on the platform since that's not specifically mentioned, SAP will run its SaaS-based travel and expense management company, Concur, off of SAP S/4HANA on Azure. That is no small commitment, as SAP paid $8.3 billion to acquire Concur Technologies in 2014. Also, as part of the announcement, the supply chain management business SAP Ariba, already an Azure customer, will explore expanding Azure usage within its procurement applications.

On the Microsoft side, the dogfooding of the SAP S/4HANA software will certainly lead to continuing awkward questions from both customers and partners, who wonder why Microsoft doesn't bet its own business on Dynamics 365 for Finance and Operations. In the past, Microsoft officials have suggested to Dynamics AX users that the company committed to SAP before it had its own enterprise-scale ERP offering, and that the extensive customized applications associated with the SAP system made a migration to its own ERP solution impractical.

In fact, Microsoft's experience working through the complexity of migrating its own heavily customized SAP implementation into the SAP HANA for Azure environment should prove useful for joint SAP-Microsoft customers such as The Coca-Cola Company, Columbia Sportswear Company and Costco Wholesale Corp.

Look to both Microsoft and SAP for many tips and tricks, detailed migration guidance and other best practices from the dogfooding experience. In addition to co-engineering, go-to-market activities and joint support services, the companies are promising extensive documentation from their internal deployments.

Posted by Scott Bekker on November 29, 2017

Hewlett Packard Enterprise (HPE) unveiled five packaged workload solutions that will be billed like cloud services but reside on-premises.

The pay-per-use offerings are called HPE GreenLake, and the initial set will include Big Data, backup, open database, SAP HANA and edge computing. HPE announced them Monday during its Discover conference in Madrid.

"HPE GreenLake offers an experience that is the best of both worlds -- a simple, pay-per-use technology model with the risk management of data that's under the customer's direct control," said Ana Pinczuk, senior vice president and general manager of HPE Pointnext, in a statement.

Pinczuk presented the solutions as the next step in consumption after GreenLake Flex Capacity, HPE's existing offering of on-premises infrastructure that is paid for on an as-used basis. The GreenLake Flex Capacity offering is also being expanded, according to the announcements Monday, to include more technology choices, such as Microsoft Azure Stack, HPE SimpliVity and high-performance computing (HPC).

Still fluid are details on availability of the various packages and clear lines on which products will be sold to which categories of customers by the channel versus HPE direct.

Here is how HPE describes its five new outcome-based GreenLake solutions:

HPE GreenLake Big Data offers a Hadoop data lake, pre-integrated and tested on the latest HPE technology and Hortonworks or Cloudera software.

HPE GreenLake Backup delivers on-premises backup capacity using Commvault software pre-integrated on the latest HPE technology with HPE metering technology and management services to run it.

HPE GreenLake Database with EDB Postgres is delivered on-premises and built on open source technology to help simplify operations and substantially reduce total cost of ownership for a customer's entire database platform.

HPE GreenLake for SAP HANA offers an on-premises appliance operated by HPE with the right-sized, SAP-certified hardware, operating system, and services to meet workload performance and availability objectives.

HPE GreenLake Edge Compute offers an end-to-end lifecycle framework to accelerate a customer's Internet of Things (IoT) journey.

The HPE conference and GreenLake announcements come a week after HPE announced that CEO Meg Whitman will step down from the CEO role on Feb. 1, 2018, while retaining her seat on the HPE board of directors. Her replacement will be current HPE President Antonio Neri, who will become president and CEO and a member of the board.

Posted by Scott Bekker on November 27, 2017

SkyKick and Ingram Micro now offer a bundle of Office 365, SkyKick Cloud Backup and the SkyKick Migration Suite that Microsoft Cloud Solution Provider (CSP) Indirect Resellers can buy from Ingram and sell to customers on a monthly billing basis.

The companies launched the bundle at the IT Nation show in Orlando, Fla., this month.

"We've seen across partners across the world that the more we can reduce friction, the more we can accelerate their business," said Chike Farrell, vice president of marketing at SkyKick, in explaining the thinking behind creating the bundle.

In the Ingram Marketplace, the bundle's official name is "Office 365 with SkyKick Migration & Backup."

If you think about the lifecycle of a customer cloud engagement, the bundle starts with the SkyKick Migration Suite for moving e-mail and data from existing systems into Office 365. The next element is Office 365 itself, which is available in the bundle from Ingram (a CSP Indirect Provider) in any of four versions. The final component for ongoing management is SkyKick's 2-year-old Cloud Backup for Office 365 product, which includes unlimited data storage, up to six daily automatic backups and one-click restore. Also included with the bundle is 24-hour SkyKick phone and e-mail support.

"For partners, it ties nicely into their business model. There's no break between Office 365 and how it's sold," said Peter Labes, vice president of business development at SkyKick. Labes added that SkyKick hopes the bundle will become Ingram's "hero SKU" for CSP Indirect Resellers of Office 365.

The bundle is initially available in the United States, but the companies plan to add other geographies.

Posted by Scott Bekker on November 17, 2017

A new survey of 500 U.S. organizations shows IT decision-makers are worried about the effectiveness and frequency of their data backups.

Data management and protection specialist StorageCraft commissioned the study with a third-party research organization.

The survey had three key findings.

Instant Data Recovery: More than half of respondents (51 percent) lacked confidence in the ability of their infrastructure to bring data back online after a failure in a matter of minutes.

Data Growth: The survey results suggested that organizational data growth is out of control. Some 43 percent of respondents reported struggling with data growth. The issue was even more pronounced for organizations with revenues above $500 million. In that larger subset, 51 percent reported data growth as a problem.

Backup Frequency: Also in the larger organization subset, slightly more than half wanted to conduct backups more frequently but felt their existing IT infrastructure prevented it.

In an interview about the results, Douglas Brockett, president of Draper, Utah-based StorageCraft, said the uncertainty among U.S. customers points to opportunities for the channel.

"Despite all the work that's been done, we still had a significant percentage of the IT decision-makers who weren't confident that they could have an RTO [recovery time objective] in minutes rather than hours," Brockett said. "Channel partners have an 'in' here, where they can help these customers get a more robust infrastructure."

Posted by Scott Bekker on November 16, 2017

Microsoft Visual Studio Team Services/Team Foundation Server (VSTS/TFS) isn't just a toolset for DevOps; the large team at Microsoft behind the products is a long-running experiment in doing DevOps.

During the main keynote at the Live! 360 conference in Orlando, Fla., this week, Buck Hodges shared DevOps lessons learned at Microsoft scale. While Microsoft has tens of thousands of developers engaged to varying degrees in DevOps throughout the company, Hodges, director of engineering for Microsoft VSTS, focused on the 430-person team developing VSTS/TFS.

VSTS and TFS, which share a master code base, provide a set of services for software development and DevOps, including source control, agile planning, build automation, continuous integration, continuous deployment, test case management, release management, package management, analytics and insights, and dashboards. Microsoft updates VSTS every three weeks, while new on-premises versions of TFS are scheduled every four months.

Hodges' narrower lens on the VSTS/TFS team provides a lengthy and deep set of experiences around DevOps. Hodges started on the TFS team in 2003 and helped lead the transformation into cloud as a DevOps team with VSTS. The group's real trial by fire in DevOps started when VSTS went online in April 2011.

"That's the last time we started from scratch. Everything's been an upgrade since then. Along the way, we learned a lot, sometimes the hard way," Hodges said.

Here are 15 DevOps tips gleaned from Hodges' keynote. (Editor's Note: This article has been updated to remove an incorrect reference to "SourceControl.Revert" in the third tip.)

1. Use Feature Flags

The whole point of a fast release cycle is fixing bugs and adding features. When it comes to features, Microsoft is using a technique called feature flags that allows them to designate how an individual feature gets deployed and to whom.

"Feature flags have been an excellent technique for us for both changes that you can see and also changes that you can't see. It allows us to decouple deployment from exposure," Hodges said. "The engineering team can build a feature and deploy it, and when we actually reveal it to the world is entirely separate."

Buck Hodges, director of engineering for Microsoft Visual Studio Team Services, makes the case for feature flags as a key element of DevOps.

The granularity allowed by Microsoft's implementation of feature flags is surprising. For example, Hodges said the team added support for the SSH protocol, a feature very few organizations need but one that those who do need it are passionate about. Rather than turning SSH on for every account, Hodges wrote a blog post asking users who needed it to e-mail him. The VSTS team was then able to turn on the feature for those customers individually.
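A feature flag check can be as small as a lookup at the point where a new code path begins. The sketch below is hypothetical and is not how VSTS implements its flags: the `FeatureFlag` class, the `git_protocols` function and the account names are invented for illustration. It shows the decoupling Hodges describes, with the SSH code deployed to every account but exposed only to accounts that asked for it.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class FeatureFlag:
    """Hypothetical flag record: a global switch plus a per-account allow list."""
    name: str
    enabled_for_all: bool = False
    allowed_accounts: set[str] = field(default_factory=set)

    def is_enabled(self, account: str) -> bool:
        return self.enabled_for_all or account in self.allowed_accounts


# The SSH code ships to every account; the flag controls who can see it.
ssh_flag = FeatureFlag("ssh-support")
ssh_flag.allowed_accounts.add("contoso")  # an account that e-mailed asking for SSH


def git_protocols(account: str) -> list[str]:
    """Protocols offered to an account, with SSH gated behind the flag."""
    protocols = ["https"]
    if ssh_flag.is_enabled(account):
        protocols.append("ssh")
    return protocols


print(git_protocols("contoso"))   # ['https', 'ssh']
print(git_protocols("fabrikam"))  # ['https']
```

Making the feature generally available later is a one-field change (`enabled_for_all = True`) rather than a new deployment.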

2. Define Some Release Stages

In most cases, Microsoft will be working on features of interest to more than a handful of customers. With defined release stages, those features can be flagged for, and tested by, larger and larger circles of users. Microsoft's predefined groups before a feature is fully available are:

- Stage 0: internal Microsoft

- Stage 1: a few external customers

- Stage 2: private preview

- Stage 3: public preview
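One way to model these stages is as concentric rings, where raising a feature's stage exposes it to the next ring while earlier rings keep access. In this sketch the stage numbers come from the list above, but the `GENERAL` catch-all and the account-to-stage mapping are hypothetical, and treating public preview as a fixed set of opted-in accounts is a simplification.

```python
from enum import IntEnum


class Stage(IntEnum):
    """Rollout stages from the keynote; GENERAL is an added catch-all for everyone."""
    INTERNAL = 0         # Stage 0: internal Microsoft
    FEW_EXTERNAL = 1     # Stage 1: a few external customers
    PRIVATE_PREVIEW = 2  # Stage 2: private preview
    PUBLIC_PREVIEW = 3   # Stage 3: public preview
    GENERAL = 4          # hypothetical: fully available


# Hypothetical assignment of accounts to the earliest stage they belong to.
ACCOUNT_STAGE = {
    "microsoft-internal": Stage.INTERNAL,
    "early-adopter": Stage.FEW_EXTERNAL,
    "preview-signup": Stage.PRIVATE_PREVIEW,
    "public-preview-optin": Stage.PUBLIC_PREVIEW,
}


def is_exposed(feature_stage: Stage, account: str) -> bool:
    """An account sees a feature once the rollout has reached that account's ring."""
    account_ring = ACCOUNT_STAGE.get(account, Stage.GENERAL)
    return account_ring <= feature_stage


# Walk one feature through every stage and show when an early adopter gets it.
for stage in Stage:
    print(stage.name, is_exposed(stage, "early-adopter"))
```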

3. Use a Revert Button

Wouldn't it be nice to have a big emergency button allowing you to revert from a feature if it starts to cause major problems for the rest of the code? That's another major benefit of the feature flag approach. In cases where Microsoft has found that a feature is causing too many problems, it's possible to turn the feature flag off. The whole system then ignores the troublesome feature's code, and should revert to its previous working state.
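In code, the revert button is a flag check with the old path kept alive. In this hypothetical sketch (the flag store and function names are invented), switching a flag off sends traffic back to the legacy implementation without a rollback deployment. The approach only works if the old code is still present and correct, and tip 5 shows that turning flags off does not always bring a struggling service back.

```python
from __future__ import annotations

# Hypothetical in-memory flag store. A real service would read flags from shared
# configuration so that flipping one takes effect without a redeploy.
flags = {"new-search": True}


def legacy_search(query: str) -> list[str]:
    return [f"legacy:{query}"]


def new_search(query: str) -> list[str]:
    return [f"new:{query}"]


def search(query: str) -> list[str]:
    # Both implementations stay deployed; the flag picks which one runs.
    if flags.get("new-search", False):
        return new_search(query)
    return legacy_search(query)


def revert(feature: str) -> None:
    """The 'emergency button': switch a feature off instead of rolling back a build."""
    flags[feature] = False


print(search("bug 42"))  # ['new:bug 42']
revert("new-search")
print(search("bug 42"))  # ['legacy:bug 42']
```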

4. Make It a Sprint, not a Marathon

In its DevOps efforts around VSTS/TFS, Microsoft is working in a series of well-defined sprints, and that applies to the on-premises TFS, as well as the cloud-based VSTS. You could think of the old Microsoft development model as a marathon, working on thousands of changes for an on-premises server and releasing a new version every few years.

The core timeframe for VSTS/TFS is three weeks. At the end of every sprint, Microsoft takes a release branch that ships to the cloud on the service. Roughly every four months, one of those release branches becomes the new release of TFS. This three-week development motion is pretty well ingrained. The first sprint came in August 2010. In November 2017, Microsoft was working on sprint No. 127.
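The sprint number is consistent with the dates in the keynote. The sketch below assumes the first sprint began on Aug. 1, 2010, and counts up to Nov. 15, 2017, an assumed date in mid-November; the article gives only the months, and shifting either date by a couple of weeks can move the count by one, so treat this as a consistency check rather than a derivation.

```python
from datetime import date

SPRINT_DAYS = 21  # three-week sprints


def sprint_number(first_sprint_start: date, today: date) -> int:
    """1-based number of the sprint that contains `today`."""
    elapsed_days = (today - first_sprint_start).days
    return elapsed_days // SPRINT_DAYS + 1


# Assumed dates: the article gives only "August 2010" and "November 2017".
print(sprint_number(date(2010, 8, 1), date(2017, 11, 15)))  # 127

# Sprints per TFS release if "roughly four months" means 4 average months of 30.44 days.
print(round(4 * 30.44 / SPRINT_DAYS, 1))  # 5.8
```

In other words, roughly every sixth sprint's release branch becomes an on-premises TFS release.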

5. Flip on One Feature at a Time

No lessons-learned list is complete without a disaster. The VSTS team's low point came at the Microsoft Connect() 2013 event four years ago. The plan was to wow customers with a big release. An hour before the keynote, Microsoft turned on about two dozen new features. "It didn't go well. Not only did the service tank, we started turning feature flags off and it wouldn't recover," Hodges said, describing the condition of the service as a "death spiral."

It was two weeks before all the bugs were fixed. Since then, Microsoft has taken to turning on new features one at a time, monitoring them very closely, and turning features on completely at least 24 hours ahead of an event.
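The value of going one at a time is attribution: when health drops, the feature just flipped is the prime suspect, a signal that disappears when two dozen flags change at once. This hypothetical sketch (the function names and health check are invented) enables flags sequentially and backs out the first one that causes a regression.

```python
from __future__ import annotations

from typing import Callable, Iterable


def enable_one_at_a_time(
    features: Iterable[str],
    flags: dict[str, bool],
    is_healthy: Callable[[], bool],
) -> list[str]:
    """Turn features on one by one, backing out the first one that hurts health.

    Returns the features left enabled. A real rollout would watch each step
    over a long observation window rather than a single instantaneous check.
    """
    enabled: list[str] = []
    for feature in features:
        flags[feature] = True
        if not is_healthy():
            flags[feature] = False  # the culprit is the feature just flipped
            break
        enabled.append(feature)
    return enabled


# Demo with a hypothetical broken feature.
demo_flags: dict[str, bool] = {}
BROKEN = {"feature-c"}


def service_healthy() -> bool:
    return not any(demo_flags.get(name, False) for name in BROKEN)


print(enable_one_at_a_time(["feature-a", "feature-b", "feature-c", "feature-d"],
                           demo_flags, service_healthy))
# ['feature-a', 'feature-b']
```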

6. Split Up into Services

One other big change was partly a response to the Microsoft Connect() 2013 incident. At the time of the big failure, all of VSTS ran as one service. Now Microsoft has split that formerly global instance of VSTS into 31 separate services, giving the product much greater resiliency.

7. Implement Circuit Breakers

Microsoft took a page out of the Netflix playbook and implemented circuit breakers in the VSTS/TFS code. The analogy is to an electrical circuit breaker, and the goal is to stop a failure from cascading across a complex system. Hodges said that while fast failures are usually relatively straightforward to diagnose, it's the slow failures in which system performance slowly degrades that can present the really thorny challenges.

The circuit breaker approach has helped Microsoft protect against latency, failure and concurrency/volume problems, as well as shed load quickly, fail fast and recover more quickly, he said. Additionally, having circuit breakers creates another way to test the code: "Let's say we have 50 circuit breakers in our code. Start flipping them to see what happens," he said.

Hodges offered two warnings about circuit breakers. One is to make sure the team doesn't start treating the circuit breakers as causes rather than symptoms of an event. The other is that it can be difficult to understand what opened a circuit breaker, requiring thoughtful and specialized telemetry.
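The pattern is compact enough to sketch. The class below is a minimal, hypothetical Python version of the closed/open/half-open breaker that Netflix popularized with its Hystrix library, not Microsoft's implementation. After `failure_threshold` consecutive failures it opens and fails fast; after `reset_after` seconds it lets a trial call through, closing on success and reopening on failure. The `trip()` method mirrors Hodges' suggestion of deliberately flipping breakers, and the injectable clock lets the behavior be tested without waiting.

```python
from __future__ import annotations

import time
from typing import Callable, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised instead of calling a dependency while the breaker is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: float | None = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_after:
            return "half-open"  # allow a trial call through
        return "open"

    def trip(self) -> None:
        """Open the breaker by hand, e.g. to test how callers cope."""
        self.opened_at = self.clock()

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            raise CircuitOpenError("failing fast; dependency marked unhealthy")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            # A failed trial call, or too many consecutive failures, (re)opens it.
            if self.state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        self.opened_at = None  # a success closes the breaker
        return result


# Demo with a fake clock so no real waiting is needed.
now = [0.0]
breaker = CircuitBreaker(failure_threshold=2, reset_after=10.0, clock=lambda: now[0])


def flaky() -> int:
    raise TimeoutError("slow dependency")


for _ in range(2):
    try:
        breaker.call(flaky)
    except TimeoutError:
        pass
print(breaker.state)             # open
now[0] = 10.0
print(breaker.state)             # half-open
print(breaker.call(lambda: 42))  # 42
print(breaker.state)             # closed
```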

8. Collect Telemetry

Here's a koan for DevOps: The absence of failure doesn't mean a feature is working. In a staged rollout environment like the one Microsoft runs, traffic on new features is frequently low. As the feature is exposed through the larger concentric circles of users in each release stage, it's getting more and more hits. Yet a problem may not become apparent until some critical threshold gets reached weeks or months after full availability of a feature.

In all cases, the more telemetry the system generates, the better. "When you run a 24x7 service, telemetry is absolutely key, it's your lifeblood," Hodges said. "Gather everything." For a benchmark, Microsoft is pulling in 7TB of data on average every day.
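For scale, 7TB a day works out to a steady stream of roughly 81MB every second. The arithmetic below assumes decimal units and an even spread across the day; real telemetry traffic is burstier, so peak ingest would be higher.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def average_ingest_mb_per_s(tb_per_day: float) -> float:
    """Average ingest rate in decimal MB/s for a daily volume in decimal TB."""
    return tb_per_day * 1_000_000 / SECONDS_PER_DAY


print(round(average_ingest_mb_per_s(7), 1))  # 81.0
print(7 * 365 / 1000)  # 2.555 (PB per year at the same average)
```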

9. Refine Alerts

A Hoover-up-everything approach is valuable when it comes to telemetry so that there's plenty of data available for pursuing root causes of incidents. The opposite is true on the alert side. "We were drowning in alerts," Hodges admitted. "When there are thousands of alerts and you're ignoring them, there's obviously a problem," he said, adding that too many alerts make it more likely you'll miss problems. Cleaning up the alert system was an important part of Microsoft's DevOps journey, he said. Microsoft's main rules on alerts now are that every alert must be actionable and alerts should create a sense of urgency.

10. Prioritize User Experience

When deciding which types of problems to prioritize in telemetry, Hodges said Microsoft is emphasizing user experience measurements. Earlier monitoring might have concluded that performance was fine as long as user requests weren't failing. But users have expectations about responsiveness, and past a certain delay a user loses his or her train of thought. That makes it important not only to measure failed requests but also to treat a request that takes too long as a problem. "If a request takes more than 10 seconds, we consider that a failed request," Hodges said.
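Hodges' rule changes how availability is computed. This sketch assumes each request is recorded with a success flag and a duration (the `Request` type and function names are hypothetical) and counts a slow success as a failure, using the 10-second threshold from the keynote.

```python
from __future__ import annotations

from dataclasses import dataclass

SLOW_REQUEST_SECONDS = 10.0  # threshold quoted in the keynote


@dataclass
class Request:
    succeeded: bool
    duration_s: float


def is_failed(req: Request) -> bool:
    """Errors and too-slow successes both count as failures."""
    return (not req.succeeded) or req.duration_s > SLOW_REQUEST_SECONDS


def availability(requests: list[Request]) -> float:
    """Share of requests that were neither errors nor too slow."""
    if not requests:
        return 1.0  # design choice: no traffic is not reported as an outage
    good = sum(1 for r in requests if not is_failed(r))
    return good / len(requests)


sample = [Request(True, 0.3), Request(True, 12.0), Request(False, 0.1), Request(True, 2.5)]
print(availability(sample))  # 0.5: the 12-second success counts as a failure
```

Counting only errors, the same sample would score 0.75, which is exactly the gap between "requests weren't failing" and "users were well served."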

11. Optimize DRIs' Time

Microsoft added a brick to the DevOps foundation in October 2013 with the formalization of designated responsible individuals, or DRIs. Responsible for rapid response to incidents involving the production systems, the DRIs represent a formalization on the operations side of DevOps. In Microsoft's case, the DRIs are on-call 24/7 on 12-hour shifts and are rotated weekly. In the absence of incidents, DRIs are supposed to conduct proactive investigation of service performance.

In case of an incident, the goal is to have a DRI on top of the issue within five minutes during the day and 15 minutes at night. Traditional seniority arrangements give the most experienced people the plum day shifts. Microsoft has found that flipping that arrangement, so that more experienced DRIs cover the night, works best.

"We found that at night, inexperienced DRIs just needed to wake up the more experienced DRI anyway," Hodges said. As for off-hours DRIs accessing a production system, Microsoft also provides them with custom secured laptops to prevent malware infections, such as those from phishing attacks, from working their way into the system and wreaking havoc.

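The response targets translate directly into a check that incident tooling could run. In this sketch the 8 a.m. to 8 p.m. day shift is an assumption (the article says only that DRIs work 12-hour shifts), and the function names are hypothetical.

```python
from datetime import datetime, timedelta

# Assumed shift boundaries: the article says only that DRIs work 12-hour shifts.
DAY_START_HOUR, DAY_END_HOUR = 8, 20
DAY_TARGET = timedelta(minutes=5)     # daytime target from the keynote
NIGHT_TARGET = timedelta(minutes=15)  # nighttime target from the keynote


def engagement_target(alert_time: datetime) -> timedelta:
    """How quickly a DRI should be on an incident raised at alert_time."""
    is_day = DAY_START_HOUR <= alert_time.hour < DAY_END_HOUR
    return DAY_TARGET if is_day else NIGHT_TARGET


def met_target(alert_time: datetime, engaged_time: datetime) -> bool:
    return engaged_time - alert_time <= engagement_target(alert_time)


print(met_target(datetime(2017, 11, 14, 14, 0), datetime(2017, 11, 14, 14, 7)))  # False
print(met_target(datetime(2017, 11, 14, 2, 0), datetime(2017, 11, 14, 2, 12)))   # True
```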
12. Assign Shield Teams

The VSTS/TFS team is organized into about 40 feature teams of 10 engineers and a program manager or two. With that aggressive every-three-weeks sprint schedule, those engineers need to be heads down on developing new features for the next release. Yet if an incident comes up in the production system involving one of their features, the team has to respond. Microsoft's process for that was to create a rotating "shield team" of two of the 10 engineers. Those engineers are assigned to address or triage any live-site issues or other interruptions, providing a buffer that allows the rest of the team to stay focused on the sprint.
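A rotation like this is easy to automate. The sketch assumes the two shield slots rotate round-robin every sprint, which the article does not specify, and the engineer names are placeholders.

```python
from __future__ import annotations


def shield_team(engineers: list[str], sprint: int, size: int = 2) -> list[str]:
    """Pick `size` engineers for this sprint's shield duty, rotating round-robin."""
    start = (sprint * size) % len(engineers)
    return [engineers[(start + i) % len(engineers)] for i in range(size)]


team = [f"eng{i}" for i in range(10)]  # a feature team of 10 engineers
for sprint in range(3):
    print(sprint, shield_team(team, sprint))
# 0 ['eng0', 'eng1']
# 1 ['eng2', 'eng3']
# 2 ['eng4', 'eng5']
```

With 10 engineers and two slots, each engineer takes a shield turn once every five sprints and spends the other four heads down on features.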

13. Pursue Multiple Theories

In the case of a live site incident, there's usually a temptation to seize on a theory of the problem and dedicate all the available responders to pursuing that theory in the hopes that it will lead to a quick resolution. The problem comes when the theory is wrong. "It's surprising how easy it is to get myopic. If you pursue each of your theories sequentially, it's going to take longer to fix the problem for the customer," Hodges said. "You have to pursue multiple theories."

In a similar vein, Microsoft has found it's important and helpful to rotate out incident responders and bring in fresh, rested replacements if an incident goes more than eight or 10 hours without a resolution.
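Hodges was talking about people, but the same principle applies to automated diagnostics. In this hypothetical sketch, each theory has a check (the check functions and their delays are invented stand-ins), and all checks run concurrently with Python's `concurrent.futures`, so the investigation takes about as long as the slowest check rather than the sum of all of them.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


# Hypothetical checks, one per theory; each returns True if evidence supports it.
def check_bad_deployment() -> bool:
    time.sleep(0.2)
    return False


def check_database_contention() -> bool:
    time.sleep(0.1)
    return True


def check_dns_failure() -> bool:
    time.sleep(0.3)
    return False


THEORIES = {
    "bad deployment": check_bad_deployment,
    "database contention": check_database_contention,
    "DNS failure": check_dns_failure,
}


def investigate(theories):
    """Run every theory's check at once and return the names of supported theories."""
    supported = []
    with ThreadPoolExecutor(max_workers=max(1, len(theories))) as pool:
        futures = {pool.submit(check): name for name, check in theories.items()}
        for future in as_completed(futures):
            if future.result():
                supported.append(futures[future])
    return supported


start = time.perf_counter()
print(investigate(THEORIES))  # ['database contention']
# Elapsed time should be near the slowest check (0.3s), not the 0.6s total.
print(f"{time.perf_counter() - start:.2f}s")
```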

14. Combine Dev and Test Engineer Roles

One of the most critical evolutions affecting DevOps at Microsoft over the past few years involved a companywide change in the definition of an engineer. Prior to combining them in November 2014, Microsoft had separate development engineer and test engineer roles. Now developers who build code must test the code, which provides significant motivation to make the code more testable.

15. Tune the Tests

The three-week sprint cycle led to a simultaneous acceleration in testing processes, with the biggest improvements coming in the last three years. Hodges said that as of September 2014, the so-called "nightly tests" required 22 hours, while the full run of testing took two days.

A new testing regimen breaks testing down into levels, using a test taxonomy that runs the code against progressively more involved levels so simple problems can be caught and fixed quickly. The first level tests only binaries and doesn't involve any dependencies. The next level adds the ability to use SQL and the file system. A third level tests a service via the REST API, while the fourth level is a full environment for testing end to end. The upshot is that Microsoft is running many more tests against the code in much less time.
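A minimal sketch of the level-by-level idea, assuming each test is a zero-argument function that returns True on success: levels run cheapest first, and a failure at a lower level stops the run before the slow end-to-end tests start. The levels are numbered 0 through 3 here for illustration, and the test functions are placeholders.

```python
from __future__ import annotations

from typing import Callable

# Level descriptions follow the taxonomy described in the keynote.
LEVELS = {
    0: "binaries only, no external dependencies",
    1: "may use SQL and the file system",
    2: "exercises a service through its REST API",
    3: "full environment, end to end",
}


def run_by_level(suite: dict[int, list[Callable[[], bool]]]) -> tuple[int, bool]:
    """Run cheaper levels first; stop at the first level containing a failure.

    Returns (last level run, whether every test run so far passed).
    """
    last_level = -1
    for level in sorted(suite):
        last_level = level
        print(f"level {level}: {LEVELS.get(level, 'unknown')}")
        # all() short-circuits, so a level stops at its first failing test.
        if not all(test() for test in suite[level]):
            return level, False
    return last_level, True


# Placeholder tests: level 2 fails, so the slow level 3 never runs.
suite = {
    0: [lambda: True, lambda: True],
    1: [lambda: 1 + 1 == 2],
    2: [lambda: False],
    3: [lambda: True],
}
print(run_by_level(suite))  # (2, False)
```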

Posted by Scott Bekker on November 16, 2017

Partners pitching business intelligence (BI) solutions to business users rather than database administrators (DBAs) appear to have a committed new ally in Microsoft.

In Microsoft's recent enhancements to its BI platforms, the absence of rows, columns and even traditional data management terminology makes it evident that the changes aren't aimed at DBAs.

"Our No. 1 persona, our No. 1 person that we're building for, is the business user," said Charles Sterling, senior program manager in the Microsoft Business Applications Group, during his keynote for the SQL Server Live! track of the Live! 360 conference Tuesday in Orlando, Fla.

Sterling, a 25-year Microsoft veteran, and Ted Pattison of Critical Path Training delivered the session, "Microsoft BI -- What's New for BI Pros, DBAs and Developers." Their talk centered on demos of Power BI, but also touched on roadmaps for elements of Power BI and covered the growing role of PowerApps and Flow. The main idea behind PowerApps is to let business users, without writing code, pull data from simple or complex organizational data sources and create shareable business apps that run on mobile devices or in a browser.

All of Microsoft's simplification efforts are driving an explosion of BI usage in organizations. According to a slide from the presentation titled "Power BI by the numbers," the Power BI service hosts 11.5 million data models, with 30,000 data models added each day, and handles 10 million Publish to Web views per month and 2 million report and dashboard queries per hour.

Charles Sterling, senior program manager in the Microsoft Business Applications Group, discusses Power BI improvements during his keynote for the SQL Server Live! track of the Live! 360 conference Tuesday in Orlando, Fla.

Yet one of the biggest obstacles currently keeping business users from running even further with the concept is a lack of access to organizational data. While a DBA creating a more sophisticated application has, or can quickly get, permissions for the underlying organizational data, the story is different for most business users.

"For [business users] to create an app that goes out and collects data is relatively difficult," Sterling said. "That's what PowerApps is going to enable business people to do in the near future. You can do it right now, but we are actually integrating it into Power BI."

A related feature on the PowerApps roadmap, called Write Back, will let business users update the back-end database with PowerApps from within their dashboard. That capability will require more communication across the business, including DBAs educating users to help set expectations.

Sterling said the Write Back feature is one of his favorite new features, but he suggested it may be a bit of a struggle for DBAs as business users get going with it.

"For DBAs, empowering business users with the tools to directly update and collect data will likely open a whole new host of problems. Take, for example, a business user updating data being fed into a Hadoop cluster. It is entirely likely that the processing could take hours to days to propagate into the BI system they are viewing. Business users will expect those updates in real time," Sterling said in an interview.

Another big element of the roadmap with business users in mind is storytelling in the Power BI Desktop, Sterling said.

During a demo, Pattison clicked on slicers in a Power BI graph showing values that were increasing or decreasing. The example application then brought up a sentence, based on the underlying data, explaining in business terms what was driving the increase or decrease.

"We're going to continue playing out this whole storytelling [approach]," Sterling said. In another example of such storytelling, Sterling and Pattison ran most of their presentation out of bookmarks in Power BI rather than PowerPoint. The bookmarks recall a preconfigured view of a report page, and pull live data when the bookmark is displayed.

Another demo during the session that caught attendees' attention involved Power BI Report Server, an enterprise reporting solution that received an update release on Nov. 1. Blue Badge Insights Founder and CEO Andrew Brust found the walkthrough of the Power BI Report Server one of the most important demos during the session. "It's an enhanced version of reporting services, but it lets you distribute reports on premises instead of having to push them to the cloud," Brust said.

Sterling said near-term items on the Report Server roadmap include more data sources, more APIs and integration with Microsoft SharePoint.

Posted by Scott Bekker on November 15, 2017

When the ConnectWise Control team told him they were playing around with integrating smartphone cameras into their remote support offering, ConnectWise Chief Product Officer Craig Fulton wasn't sure at first that the idea added enough value to pursue.

After all, end users or field engineers could already use products like Apple FaceTime for show-and-tell with subject-matter experts or senior support staff back at the main office. But the team quickly sold him on the idea, and his demo of the technology with ConnectWise CEO Arnie Bellini was an audience favorite during the main keynote at the company's IT Nation show last week in Orlando.

The official name of the video streaming technology, expected to be available in the second quarter of 2018, is ConnectWise Perspective.

On the ConnectWise platform side, Perspective includes a browser plug-in and integrations with Control (formerly ConnectWise ScreenConnect), as well as integration with ticketing and management in products like ConnectWise Automate. But that's for the tech back at the MSP's headquarters.

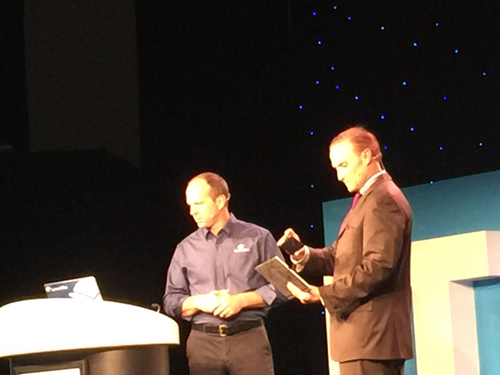

ConnectWise CEO Arnie Bellini (right) points his smartphone camera at his tablet, and ConnectWise Chief Product Officer Craig Fulton (left) streams the camera view to his PC. The on-stage preview of ConnectWise Perspective generated a lot of buzz among MSPs at IT Nation. (Source: Scott Bekker)

At the customer site, all the user needs is an iPhone or an Android phone. The headquarters tech sends a URL to the field tech or end user. By simply tapping the link and entering a code, the on-site user starts feeding live video from the phone's camera into the back-end system, so the headquarters tech sees what the user is seeing. The video streaming is built on the Web Real-Time Communication (WebRTC) protocols and APIs.

With Perspective, pointing the camera at a bar code on the underside of a laptop can instantly give the tech back at the main office all the identifying information about that system. Camera sessions will also be automatically tied to tickets and recorded for billing purposes.

ConnectWise is also working on a Perspective feature it calls Canvas. As a user moves the camera around, the images will be stitched together, similar to the way smartphone cameras build panoramic photos. A tech will be able to keep the canvas open in a browser tab separate from the live feed, building a map of an entire room and using that map, or canvas, to direct the on-site user to other areas. The headquarters tech is also supposed to be able to move a small box within the smartphone camera display to steer the user's focus toward the things that matter to the tech, Fulton explained in an interview.

Using technologies like FaceTime has introduced problems in the past that Perspective is meant to address, Fulton said. For one thing, the customer ends up with a technician's personal phone number, which raises a privacy issue and creates a temptation for the customer to reach out to that tech any time they have a problem, whether or not the tech is on duty. Additionally, FaceTime-style interactions fall outside an MSP's billing and documentation processes.

Potential usage scenarios are extensive. One is the junior tech/senior tech scenario, in which a junior tech gets hands-on experience in the field and streams video back to a senior tech for training or advanced troubleshooting.

Additionally, there's augmented self-support for customers. Anything with blinking lights and a confusing array of buttons, ports or switches presents an opportunity for Perspective, especially supporting audio/visual equipment, physical security devices or networking gear.

"For all of the things that really require having to stand in front of it, it's going to be super useful. It's going to turn the customer into a technician," Fulton said. "You see less of a need for field engineers. With cloud, the reach is getting further."

Posted by Scott Bekker on November 13, 2017

ConnectWise, which is touting its many integrations and industry openness as a differentiator in an increasingly competitive market, previewed a new developer kit that will make it faster and easier for partners to build on its platform.

CEO Arnie Bellini unveiled the ConnectWise Developer Kit on Thursday in the opening keynote of the company's IT Nation conference in Orlando, Fla.

"This is about giving you a tool that will generate code automatically and lets you very quickly add to the ConnectWise platform with your solution," Bellini said of the kit, which is in a pilot phase after a two-year development process and is expected to be generally available in the second quarter of 2018.

The company currently boasts more than 200 third-party integrations, developed over the years through co-engineering projects, APIs and SDKs.

Bellini said the new kit will streamline integrations for those third parties, but will also make it possible for the company's managed service provider (MSP) customers to tailor the ConnectWise platform to their unique needs or to create their own connections to key applications that aren't already integrated.

ConnectWise CEO Arnie Bellini announced a new developer kit and angel investment fund at IT Nation to spur vendor and partner integrations. (Source: Scott Bekker)

"This developer kit is not just about our vendor partners, but it's also about you and especially our larger partners," Bellini told many of the 3,500 attendees during the opening keynote.

In a short on-stage demo, ConnectWise Chief Product Officer Craig Fulton built one of the integrations, called a pod, in about a minute, joking that he's not a developer, but he can drag and drop.

"We'll still give you the APIs, we'll still give you the SDKs, but this is the tool that we see that unifies this ecosystem," Fulton said.

In conjunction with the new tool, Bellini offered a tantalizing hint of new funding ConnectWise will make available for partners to further build out the ecosystem.

"We have raised a fund that's up to $10 million at this point, and we hope to get it up to $25 million," he said. "We want to be angel investors for those of you that can help us connect this ecosystem together." In a later interview, Bellini said the angel investment fund doesn't have a name yet but will be run out of ConnectWise Capital.

Meanwhile, ConnectWise is moving forward with its traditional style of partnership/API/SDK integrations. The company was joined on stage by Nirav Sheth, vice president of Cisco's Global Partner Organization for Solutions, Architectures & Engineering, to talk about the companies' new partnership effort announced last week. ConnectWise and Cisco are working together on ConnectWise Unite, which will allow Cisco partners to do cloud-based management, automation and billing for Cisco Spark, Cisco Meraki, Cisco Umbrella and Cisco Stealthwatch.

As a privately held company, ConnectWise finds itself surrounded by IT service management companies with private equity backing, including Kaseya, Continuum, SolarWinds MSP and Autotask, which just combined with Datto. Last month, former Tech Data CEO Steve Raymund joined the ConnectWise board of directors and is helping the company evaluate options for outside financing.

Posted by Scott Bekker on November 09, 2017

While DevOps is a key trend for IT departments, Microsoft hopes to seed a new partner ecosystem within the Azure Marketplace around a related idea that it's calling "Ap/Ops."

The immediate evidence of the effort is general availability this month of Managed Applications in the Azure Marketplace, which is Microsoft's 3-year-old catalog of third-party applications that have been certified or optimized to run on the Azure public cloud platform.

Managed applications differ from regular applications in the Azure Marketplace. Where a customer deploys a regular application to Azure on their own or has a partner deploy it, a managed application is a turnkey package. The partner that develops the solution packages it with the underlying Azure infrastructure, sells it as a sealed bundle and handles the operations, such as management and lifecycle support of the application, on the customer's behalf.

Corey Sanders, director of compute for Microsoft Azure, described that packaging of the application and the operations as "Ap/Ops" in a blog post announcing managed applications in the Azure Marketplace.

"Managed Service Providers (MSPs), Independent Software Vendors (ISVs) and System Integrators (SIs) can build turnkey cloud solutions using Azure Resource Manager templates. Both the application IP and the underlying pre-configured Azure infrastructure can easily be packaged into a sealed and serviceable solution," Sanders said.

Customers can deploy the managed application in their own Azure subscription, where they are billed for the application's Azure consumption along with a new line item for any fees the partner charges for lifecycle operations.
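The two-part bill described above can be illustrated with a small Python sketch. The `LineItem` type, the `managed_app_charges` helper, the line-item labels and the dollar amounts are all hypothetical; this models only the structure of the charges and is not Azure's billing API or invoice format.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import List


@dataclass
class LineItem:
    description: str
    amount: Decimal


def managed_app_charges(azure_consumption: Decimal, partner_fee: Decimal) -> List[LineItem]:
    """Hypothetical bill for one managed application.

    The customer always pays for the Azure resources the application consumes;
    the partner's lifecycle-operations fee is a separate line item that appears
    only when the partner charges one.
    """
    items = [LineItem("Azure consumption", azure_consumption)]
    if partner_fee > 0:
        items.append(LineItem("Partner fee: lifecycle operations", partner_fee))
    return items


def total(items: List[LineItem]) -> Decimal:
    # Decimal avoids floating-point rounding errors in currency sums.
    return sum((item.amount for item in items), Decimal("0"))


if __name__ == "__main__":
    bill = managed_app_charges(Decimal("120.50"), Decimal("30.00"))
    for item in bill:
        print(f"{item.description}: ${item.amount}")
    print(f"Total: ${total(bill)}")
```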

Sanders presents the Azure Marketplace offering as a first in public cloud. "This new distribution channel for our partners will change customer expectations in the public cloud. Unlike our competitors, in Azure, a marketplace application can now be much more than just deployment and set-up. Now it can be a fully supported and managed solution," he said.

Three companies were ready to go last week with managed applications for sale in the Azure Marketplace -- the OpsLogix OMS Oracle Solution, Xcalar Data Platform and Cisco Meraki.

Posted by Scott Bekker on November 06, 2017