After Partner Pilots, Azure Digital Twins Hits Public Preview

By Kurt Mackie

October 16, 2018

Azure Digital Twins, a new Microsoft platform for representing buildings and outdoor spaces, is now in the public preview stage.

Microsoft first described Azure Digital Twins at last month's Ignite conference, touting it as an Internet of Things (IoT) service that brings together a number of Azure capabilities. Azure Digital Twins can use sensors to monitor conditions such as room temperature or whether a room is occupied. Organizations can use the platform directly, and Microsoft also permits its partners to build applications on it.

Microsoft's partners have used Azure Digital Twins to map building spaces and elevator designs, while also providing access to operations and maintenance information. Outdoors, it has been used to support electric vehicle charging station layouts. Microsoft also sees the platform as useful for city and campus applications. Partners that have built solutions on the platform include Allegro, Agder Energi, CBRE, Iconics, L&T Technology Services, Steelcase, Willow and Winvision.

Microsoft offers "nested-tenancy capabilities" with the Azure Digital Twins platform. According to Microsoft's announcement, these capabilities permit partners to "build and sell to multiple end-customers in a way that fully secures and isolates their data."

The concept behind Azure Digital Twins comes from the world of physical products, where duplicates of a product are built for testing purposes. Microsoft is taking a different approach by modeling the environment itself and then monitoring what gets added to it, according to an explanation in a Microsoft Channel 9 video.

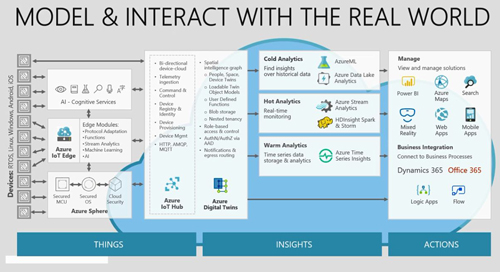

Azure Digital Twins works with Azure IoT Hub, Microsoft's service for managing IoT devices. The basic components of Azure Digital Twins include a spatial intelligence graph, role-based access control for security, Azure Active Directory authentication, and "notifications and egress routing" (see chart).

Diagram showing Azure Digital Twins platform in relation to other Azure services. (Source: Microsoft Channel 9 video)

The spatial intelligence graph surfaces the "relationships between people, places and devices." It supports blob storage, along with the ability to attach items such as documents, manuals, maps and pictures as "metadata to the spaces, people and devices represented in the graph." Microsoft also provides "predefined schema and device protocols," which it calls "twin object models," that developers can use to customize their solutions.

Microsoft is also touting the ability of Azure Digital Twins to integrate with various Azure services, such as "Azure analytics, AI, and storage services, as well as Azure Maps, Azure High-Performance Computing, Microsoft Mixed Reality, Dynamics 365 and Office 365."

The Azure Digital Twins preview is available for testing. Microsoft provides documentation, as well as a "Quickstart" sample project written in C#.

In addition to the Azure Digital Twins preview, Microsoft announced a preview of the Vision AI Developer Kit, which developers can use to create "intelligent apps" for sensor devices. The kit can be used with Azure IoT services "to easily deploy AI models built using Azure Machine Learning and Azure IoT Edge," according to Microsoft's announcement. It includes a device based on Qualcomm's Visual Intelligence Platform, which provides hardware acceleration for the kit's artificial intelligence capabilities.

Microsoft also updated its Azure IoT Reference Architecture to version 2.1, and it released a preview of the Open Neural Network Exchange (ONNX) runtime. The open source ONNX runtime works with the Azure Machine Learning service, with the Windows Machine Learning APIs in the Windows 10 October 2018 Update, and with .NET apps.

About the Author

Kurt Mackie is senior news producer for 1105 Media's Converge360 group.