In-Depth

How Containers Fit into the Microsoft Stack: A Visual Guide

Microsoft is moving faster than ever to embrace containers on Microsoft Azure and to build the technology into the next version of Windows through a major partnership with Docker. Here's a visual guide to what all the fuss is about.

- By Scott Bekker

- June 03, 2015

There's been a virtual frenzy in Redmond around containers in recent months. While containers are a relatively mature and well-understood concept in the Linux community, containerization in the OS is striking much of the Microsoft ecosystem like a bolt out of the blue.

Microsoft's new eagerness to embrace the Linux and open source communities is evident in its significant partnership with Docker Inc. to welcome containers into Microsoft Azure and, soon, into Windows Server itself.

Look no further than the Build conference for evidence of Microsoft's enthusiasm for Docker. Microsoft Azure CTO Mark Russinovich took to the Build stage in late April, not with a Microsoft shirt or even the casual, button-down shirt that presenting 'Softies normally wear. Instead, he sported a Docker T-shirt emblazoned with that Silicon Valley company's trademarked logo of a whale with boxes that look like shipping containers on its back.

If you're finding the speed of Microsoft's moves around containers and open source software in the Satya Nadella era a little disorienting, you're not alone in feeling off balance. In a recent research note, "Can Less OS Really Be More?" IDC analysts Al Hilwa, Al Gillen and Gary Chen addressed recent moves by Microsoft, Red Hat Inc. and CoreOS Inc. on containers. Of Microsoft, the analysts said, "It may seem odd to include Microsoft in this discussion, but in the last 12 months, the rate of change in the company has accelerated beyond anything IDC has seen in recent history. Executives are considering, and executing on, moves that previously would have been unthinkable for the software giant, especially in terms of adopting, contributing to and interoperating with open source technologies."

Is it a change of heart for Microsoft, a company whose previous CEO Steve Ballmer equated open source with cancer? Ballmer's attitude softened in later years, but it's also certainly true that Nadella's Microsoft views Linux and open source more, well, openly. There's more than good feeling at play, though, as the IDC analysts noted: "In the platform space, Microsoft has been focused on a high degree of product innovation to ensure that it can keep its technology competitive with the fast-moving open source world, which is being heavily leveraged by its competitors like Google and Amazon AWS. Microsoft's quick reaction to adopt Docker in the second half of last year can be attributed to this new-found agility."

Container technology is moving so fast and embedding so deeply into the Microsoft roadmap that it's hard to keep track of how all the pieces fit together, especially for those who are steeped in the Microsoft stack but are less familiar with developments on the open source side. RCP interviewed executives at Microsoft and Docker, checked in with industry analysts and reviewed technical documents to create this visual guide to help make clear what containers are, how they differ from virtualization, and what their current and future role is in Azure and Windows Server.

Figure 1.

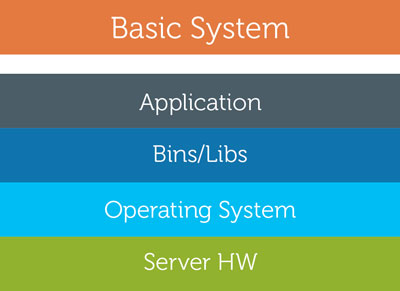

Basic System (Figure 1)

There are a lot of ways to diagram an OS. That's part of the reason it's hard to figure out at first what's going on with containerization -- everyone describes and illustrates their approach differently. In this feature, we'll use a consistent set of diagrams to provide an apples-to-apples, and very high-level, comparison of what containers are doing and how they do it differently from traditional systems and virtual machines (VMs). The layered approach we'll use here borrows from, and expands upon, two simple diagrams on an About page of the Docker site that compare Docker containers to VMs.

To establish a baseline, we'll look at a traditional server running one application. On the lowest level is the server hardware, which includes the CPU, the memory and the I/O for storage. Above that is the OS. One level above that are the binaries and libraries -- think of the Microsoft .NET Framework or Node.js -- that applications rely on to run. At the top is the application itself.

Figure 2.

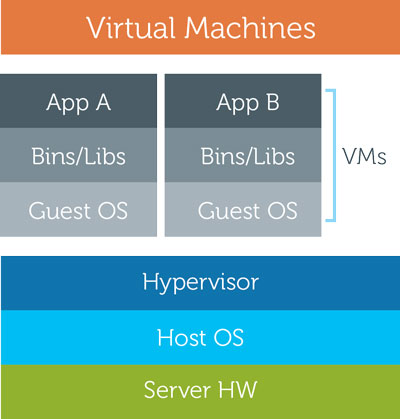

Virtual Machines (Figure 2)

The most common question about containers is: How do they compare to VMs? So let's quickly review what a VM is doing. In Figure 2, you see the server hardware at the bottom, with a host OS running on the bare metal. Next, you have a hypervisor, which could be from VMware Inc. or Microsoft Hyper-V or a slew of other options. On top of the hypervisor are two VMs. Each VM has a full guest OS, and each is loaded with binaries and libraries and an application. The hypervisor presents itself to each guest OS as if it were the server hardware, so it looks to the guest OS as if it's controlling the server hardware directly. Instead, the hypervisor is provisioning the CPU, memory and I/O resources between the two guest OSes in their VMs according to the priorities an administrator set in the hypervisor. So, now we're all caught up to the year 2002.

Figure 3.

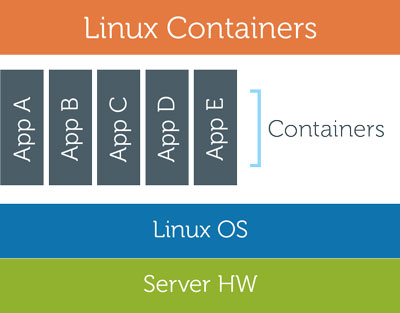

Linux Containers (Figure 3)

VMs are extremely popular, and they're gradually taking over the datacenter and much of the IT world from the basic system we looked at in Figure 1. There are some limitations, though, which some of the biggest cloud providers began bumping into in the midst of their mega-datacenter buildouts a few years ago. Google and others began experimenting with containers for their massive scale operations. VMs are good for some aspects of scale operations. You can move the images around from server to server, quickly increase capacity by spinning them up relatively quickly and fail them over relatively easily. The speed in starting them up, though, while quicker than a basic system, left something to be desired. What Linux container pioneers realized was that you could put the application in a container on the OS kernel. That way, at startup, the OS kernel is already running, cutting significant time out of the startup sequence.

The concepts evolved into LXC and cgroups, components that were built into the most popular Linux distributions over time. LXC, which is relatively synonymous with Linux containers, and cgroups provide isolation for the application. Much like with VMs, it looks to the application as if it has control of key OS functions. In reality, the container is boxed off with its own namespace, file system and so on, and the container-related components allocate the resources of the entire OS among the containers. That way the application can't run away with system resources, nor is it aware that other applications are running on the system.
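The resource-control half of that description can be illustrated with a simplified model of proportional CPU shares, the idea behind the cgroups `cpu.shares` setting. This is a minimal sketch, assuming every container is busy for the whole time window (shares only matter under contention, and the model never redistributes idle time). The container names and window length are hypothetical; 1024 is the conventional default share value.

```python
def allocate_cpu(shares: dict[str, int], window_ms: int) -> dict[str, float]:
    """Divide a CPU time window among busy containers in proportion to their shares.

    Simplified model: assumes every container wants the CPU for the whole window,
    so idle time is never redistributed.
    """
    total = sum(shares.values())
    if total <= 0:
        raise ValueError("at least one container needs a positive share")
    return {name: window_ms * share / total for name, share in shares.items()}


# Hypothetical containers: "web" gets the default weight, the other two get half of it.
alloc = allocate_cpu({"web": 1024, "batch": 512, "cache": 512}, window_ms=1000)
print(alloc)  # {'web': 500.0, 'batch': 250.0, 'cache': 250.0}
```

While all three are busy, no container can take more than its proportional slice, which is the "can't run away with system resources" property in miniature.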

A Linux OS can conceivably run hundreds or thousands of isolated containers -- a level of scalability that a server running applications in individual VMs can't match because each isolated unit must also carry the load of a full OS.
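The arithmetic behind that claim can be sketched as a memory budget: every VM pays for a full guest OS, while containers pay only for their own application layers on top of the shared kernel. This is a minimal model, assuming memory is the only constraint. All sizes are hypothetical placeholders, not figures from Microsoft or Docker.

```python
def max_isolated_units(server_gb: float, host_os_gb: float,
                       app_gb: float, guest_os_gb: float = 0.0) -> int:
    """How many isolated apps fit in a server's memory.

    guest_os_gb > 0 models a VM (Figure 2): each unit carries its own guest OS.
    guest_os_gb == 0 models a container (Figure 3): units share the host kernel.
    """
    per_unit = app_gb + guest_os_gb
    return int((server_gb - host_os_gb) // per_unit)


# Placeholder sizes chosen for illustration only; they are not measurements.
SERVER_GB, HOST_OS_GB, APP_GB, GUEST_OS_GB = 64, 2, 0.5, 2

vms = max_isolated_units(SERVER_GB, HOST_OS_GB, APP_GB, GUEST_OS_GB)
containers = max_isolated_units(SERVER_GB, HOST_OS_GB, APP_GB)
print(vms, containers)  # 24 124
```

The exact numbers depend entirely on the placeholders; the structural point is that the guest OS term multiplies with every VM, while containers pay for the OS only once.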

Mike Schutz, general manager of Cloud Platform Marketing at Microsoft, identifies the speed at which containers start up as a very attractive part of containerization. "What we're finding in the early deployments of container technologies today in the Linux world and going forward in the Windows world is the ability to start up new processes and instances of applications very, very rapidly, in seconds rather than minutes, is appealing in distributed systems and cloud-style architectures."

Figure 4.

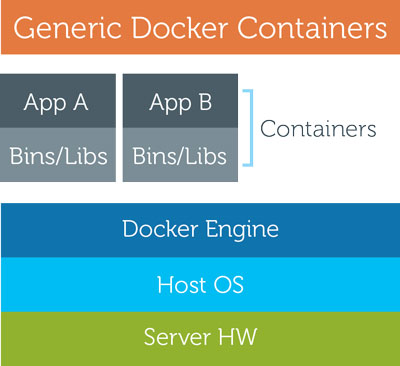

Docker Containers (Figure 4)

Although they've been around since the last decade, containers got a huge buzz boost from the 2013 debut of Docker, a project and company that took the container idea in a new direction. Originally, containers were designed to solve an operations problem involving isolation from system resources and speed of provisioning new services in environments with extremely variable loads, such as those at Google and Twitter.

The problem that Docker Founder and CTO Solomon Hykes was trying to solve was all about developers. Looking at the development cycle on the Linux side, developers face tricky issues. The basic systems of Figure 1 are few and far between as development targets. Instead, applications call for services from various sources, running various OSes and involving various development frameworks, all with different version numbers. Once the app is written, the developer has to move it to a test system and then to a production server or a cloud environment. All of those environments involve troubleshooting just to get the versions of the OSes, binaries and libraries correct. When Hykes looked at containers, he saw the possibility to package up all of the application's dependencies inside the container, with only a few requirements for the basic OS kernel functions provided through things such as LXC and cgroups. If all of an application's dependencies are bundled into the container, it becomes much quicker to move the application from dev to test to ops.
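The versioning problem Hykes targeted can be sketched as a dependency check run against each environment an app passes through. This is a toy model: the package names, versions and environments are hypothetical, and "bundling" is represented simply as the app carrying its own copy of the dependency set instead of relying on the host's.

```python
# Hypothetical requirements for one app; package names and versions are illustrative.
APP_NEEDS = {"node": "0.12", "openssl": "1.0.2"}


def missing_deps(needs: dict[str, str], env: dict[str, str]) -> dict[str, str]:
    """Return each required package whose exact version the environment lacks."""
    return {pkg: ver for pkg, ver in needs.items() if env.get(pkg) != ver}


# Unbundled: the app relies on whatever each machine happens to have installed.
hosts = {
    "dev": {"node": "0.12", "openssl": "1.0.2"},
    "test": {"node": "0.10", "openssl": "1.0.2"},
    "prod": {"openssl": "1.0.1"},
}
for name, env in hosts.items():
    print(name, missing_deps(APP_NEEDS, env))
# dev {}
# test {'node': '0.12'}
# prod {'node': '0.12', 'openssl': '1.0.2'}

# Bundled: the image carries its own copies, so the app sees the same set on every host.
image = dict(APP_NEEDS)
for name in hosts:
    print(name, missing_deps(APP_NEEDS, image))  # {} on every host
```

In the unbundled case each move from dev to test to prod surfaces a different mismatch to troubleshoot; in the bundled case the check passes everywhere, because the environment the app depends on travels with it.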

"This whole process of Docker containers allows me to build, ship and run applications in a fraction of the time that I was previously able to do it," says David Messina, vice president of Enterprise Marketing at Docker. "So you have organizations that have gone from effectively nine-month cycles from code changes hitting production to them literally making hundreds of changes a day to their business applications."

To enable that bundling, whose results are known as Dockerized applications or Docker containers, the team at Docker created the Docker Engine. Represented in the diagram as a layer between the OS and the containers, the Docker Engine is sometimes loosely referred to as the hypervisor of a container environment. Its functions include tools to help developers build and package applications into containers, and it's the runtime for production Docker containers. Later, Docker would create its own container library, called libcontainer, which replaced LXC as the Docker Engine's default execution driver.

The Docker Engine also provides the tools for developers to download containerized applications created by the Docker community and incorporate those applications into their own solutions.

That community was a key selling point for Microsoft. Known as the Docker Hub, the hosted registry contains more than 100,000 containerized applications or services that are available to the broader community. The Docker Engine also includes tools to allow developers to upload their containerized apps to the Docker Hub.
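The build, ship and run flow described above can be sketched with a toy in-memory registry. Everything here is hypothetical: `Image`, `Registry`, `build` and `run` are illustrative stand-ins, not the Docker Engine's or Docker Hub's actual API, and the image reference `example/web:1.0` is made up.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Image:
    """Stand-in for a packaged app: its code plus the dependencies bundled with it."""
    app: str
    deps: tuple[str, ...]


@dataclass
class Registry:
    """Toy in-memory registry playing the role of a hosted one such as Docker Hub."""
    images: dict[str, Image] = field(default_factory=dict)

    def push(self, ref: str, image: Image) -> None:
        self.images[ref] = image

    def pull(self, ref: str) -> Image:
        if ref not in self.images:
            raise KeyError(f"image not found: {ref}")
        return self.images[ref]


def build(app: str, deps: list[str]) -> Image:
    """Package an app and its dependencies into one immutable artifact."""
    return Image(app=app, deps=tuple(deps))


def run(image: Image) -> str:
    return f"running {image.app} with {', '.join(image.deps)}"


hub = Registry()
hub.push("example/web:1.0", build("web", ["nodejs", "openssl"]))  # developer ships
print(run(hub.pull("example/web:1.0")))  # running web with nodejs, openssl
```

The design point is that the artifact is frozen once built: whoever pulls `example/web:1.0` gets exactly what the developer pushed, which is what lets the same package move from one team or environment to the next.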

Figure 5.

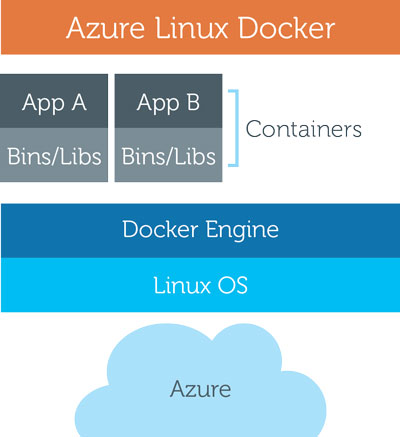

Docker on Azure (Figure 5)

Late last year, Microsoft and Docker took a big first joint step in enabling Docker on the Azure cloud. Redmond had laid the groundwork with its earlier support for Linux VMs. In the Infrastructure-as-a-Service (IaaS) scenario on Azure, a user or developer can spin up a VM on the Azure cloud, which includes a Linux guest OS. The Docker Engine then installs on top of that guest OS, with containers on top of the Docker Engine, much like the Docker Container configuration in Figure 4.

Two caveats here. One is that generic Linux containers of the non-Docker variety were possible anytime a user spun up a Linux machine on Azure IaaS. The other is that it was possible to run Docker containers on those Linux VMs, as well, without specific action by Microsoft.

But Microsoft and Docker set about working closely together to make things seamless for Docker on Azure and to lay the groundwork for the future of the partnership.

"Because of the popularity of Docker, we've done a lot more direct engagement to help the Docker community use Azure when they want to build Dockerized applications," Schutz says. Adds Messina, "We continue to collaborate, and not only is the Docker Engine available in their marketplace, there are a whole bunch of other things that we're continuing to do to collaborate on Azure. For example, one of the integrations that we did was to set up a model where with one simple command, a Docker Engine can be up and running in Azure."

Figure 6.

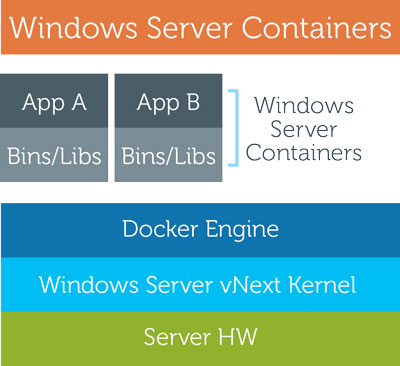

Windows Server Containers (Figure 6)

The deeper work between the companies is going into Windows Server 2016, the next generation of Windows Server slated for general availability next year. At that point, Microsoft plans to roll out Windows Server Containers, an official name for Windows Server technology that, in Schutz's words, "would be analogous to the LXC container capabilities that exist in the Linux world today. So we would do file and registry virtualization, provide resource controls similar to what cgroups in Linux does, network virtualization so that each application or each container could have its own IP address and then namespacing to provide a layer of isolation so that the container doesn't have access outside of that."
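Two of the capabilities Schutz lists can be illustrated with ordinary data structures. This is a conceptual sketch only: Microsoft had not published implementation details, and the `container_view` helper, the `ContainerNetwork` class, the registry key and the subnet are all hypothetical Python stand-ins, not Windows APIs. File and registry virtualization is modeled as a copy-on-write overlay, and network virtualization as handing each container its own address.

```python
import ipaddress
from collections import ChainMap


def container_view(host_settings: dict) -> ChainMap:
    """File/registry virtualization in miniature: reads fall through to the host,
    writes land in a private layer that belongs to this container only."""
    return ChainMap({}, host_settings)


class ContainerNetwork:
    """Hands each container its own IP address from a private subnet."""

    def __init__(self, cidr: str):
        self._free = ipaddress.ip_network(cidr).hosts()
        self.assigned: dict[str, ipaddress.IPv4Address] = {}

    def attach(self, container: str) -> ipaddress.IPv4Address:
        if container not in self.assigned:
            addr = next(self._free, None)
            if addr is None:
                raise RuntimeError("subnet exhausted")
            self.assigned[container] = addr
        return self.assigned[container]


# Hypothetical host registry key and container names.
KEY = r"HKLM\Software\Example\Mode"
host = {KEY: "shared"}
a, b = container_view(host), container_view(host)
a[KEY] = "isolated"                     # only container "a" sees this write
print(a[KEY], b[KEY], host[KEY])        # isolated shared shared

net = ContainerNetwork("172.16.0.0/24")
print(net.attach("a"), net.attach("b"))  # 172.16.0.1 172.16.0.2
```

`ChainMap` writes only to its first mapping, which is why a change made inside one container stays invisible to its neighbors and to the host.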

Figure 6 shows what Windows Server Containers would look like with the Docker Engine atop an on-premises server. However, those Windows containers could also run in an Azure IaaS cloud.

Interestingly, a Microsoft spokesperson also said that the Windows containers will not depend on Docker technology to work. Visually, this would be like taking Figure 3 and replacing the Linux OS with Windows Server 2016.

Docker executives have very high hopes for the market opportunity that bringing Dockerized containers to the Windows platform represents. "It's highly strategic," Messina says. "If half the world are Windows developers in the context of enterprise applications, this is an amazing opportunity for the project and for the company, but also for developers. We look at it as doubling the overall opportunity for Docker as opposed to being any kind of a percentage of a Linux opportunity."

While the Dockerized apps of Windows Server Containers won't be able to run in Linux containers, or vice versa, both Microsoft and Docker see potential in the ability for developers to create distributed applications by coordinating services from Linux containers and Windows containers. "Every time we talk to an enterprise, obviously, they have a huge blend of Linux and Windows," Messina says. "They're excited about the fact that there will be freedom of choice between Linux and Windows."

Nano Server

Another development in Windows Server 2016 is relevant for containers: Microsoft's planned introduction of a Nano Server deployment option for Windows Server. Similar to Server Core, which arrived in Windows Server 2008, Nano Server aims to shrink the size of the code base of Windows Server. While Server Core supported a limited set of server roles and managed to be only somewhat smaller than a full installation of the OS, Microsoft seems to be making much more dramatic progress with Nano Server.

Gone are elements like fax server and print server capabilities, the GUI and anything that someone running a large-scale datacenter wouldn't need. The end result, by Microsoft's current estimate, is an OS whose disk footprint is 93 percent to 95 percent smaller than that of a regular Windows Server installation.
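A quick check relates the two size figures in this section: savings of 93 to 95 percent leave between 7 and 5 percent of the original footprint, so the "one-twentieth" comparison corresponds to the top of Microsoft's estimated range. A small sketch using exact fractions to avoid floating-point rounding; the function name is illustrative.

```python
from fractions import Fraction


def remaining_fraction(savings_percent: int) -> Fraction:
    """Share of the original disk footprint left after the stated savings."""
    return Fraction(100 - savings_percent, 100)


for pct in (93, 95):
    left = remaining_fraction(pct)
    print(f"{pct}% saved -> {left} of the original, about 1/{float(1 / left):.1f}")
# 93% saved -> 7/100 of the original, about 1/14.3
# 95% saved -> 1/20 of the original, about 1/20.0
```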

As with Server Core, a primary motivation is security and uptime. Removing so many components reduces the attack surface and limits the number of patches that need to be applied and, therefore, the number of times a system has to be taken offline for security updates. Like Server Core, Nano Server will be a deployment option rather than an edition of Windows.

A secondary benefit is containers. Designing bare-bones OSes to support containers is a design goal of Linux vendors, such as CoreOS, developer of a thin OS of the same name, and Red Hat, which developed Red Hat Enterprise Linux 7 Atomic Host along the same lines. Red Hat and CoreOS distributions of Linux are both currently supported in Azure VMs.

Microsoft is already discussing the possibilities for Nano Server as an ideal host for Docker containers. To get a sense of how high a priority Microsoft is putting on containers as a design goal for Nano Server, consider that Microsoft is making an OS that is one-twentieth the size of the regular version. Even as Microsoft must ruthlessly cut features and functions from Windows Server for Nano Server, engineers are making room for the new Windows Server Container functionality. It's like a new hire in the midst of a mass layoff.

Circling back to the VM-versus-containers discussion, meanwhile, Nano Server should also reduce the delta between VMs and containers. If Nano Server is used as a guest OS, its ultra-slim profile would increase the number of VMs that can run on the machine and speed the startup time for VM-based applications.

Figure 7.

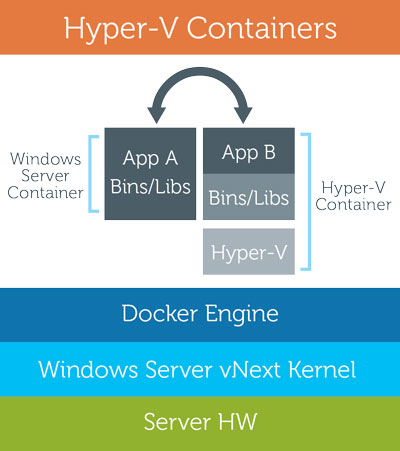

Hyper-V Containers (Figure 7)

Most of what Microsoft is doing with containers can be represented fairly easily with the architectural diagrams. However, the software giant is innovating in one area, and the messaging and public explanations aren't necessarily fully baked. That result is hardly surprising given that it's a new area, and we're talking about pre-beta server software. The cutting-edge code involves what Microsoft is calling Hyper-V Containers.

The way to think about Hyper-V Containers is that they offer both OS virtualization (container) and machine virtualization (VM) in a slightly lighter-weight configuration that will have less of a performance hit than a traditional VM. Hyper-V Containers are supposed to be interchangeable with Windows Server Containers. In other words, Windows Server Container applications that are pushed or pulled from the Docker Hub or a corporate repository can be placed in either a regular Windows Server Container or a Hyper-V Container without modification.
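The interchangeability claim can be modeled as one unchanged image reference plus a run-time choice of isolation boundary. This is a minimal sketch, assuming (as Microsoft's early descriptions suggest, though details were unsettled at press time) that a Hyper-V Container wraps the same image in an extra VM boundary instead of sharing the host kernel. The `Isolation` enum, the `launch` function and the image name are hypothetical, not a Microsoft or Docker API.

```python
from enum import Enum


class Isolation(Enum):
    PROCESS = "Windows Server Container"  # shares the host OS kernel
    HYPERV = "Hyper-V Container"          # assumed: adds a lightweight VM boundary


def launch(image_ref: str, isolation: Isolation) -> dict:
    """Hypothetical launcher: the image is untouched; only the runtime boundary differs."""
    return {
        "image": image_ref,
        "runtime": isolation.value,
        "shares_host_kernel": isolation is Isolation.PROCESS,
    }


# Same hypothetical image reference, pulled once, launched two ways.
ref = "corp/orders-service:1.0"
for mode in Isolation:
    print(launch(ref, mode))
```

Keeping the isolation choice out of the image itself is what would let an operator pick the stronger boundary for untrusted code without asking developers to repackage anything.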

We're taking a stab at a visual representation in Figure 7. At this point, it looks like there would be the server hardware layer at the bottom with a Windows Server 2016 OS running on it. Running on Windows Server is the Docker Engine, which would provide the platform for a regular Windows Server Container, the same as in Figure 6. The new piece, the Hyper-V Virtualization, appears to slide in between the Docker Engine and the Windows Server Container.

It was not entirely clear at press time what services Hyper-V Containers will provide that differ from those of Windows Server Containers. Microsoft's early marketing of Hyper-V Containers involves a security argument that it's possible for a containerized app from an untrusted source to get root access to the OS kernel. From Docker's perspective, that's an old and solved security issue, and the more concrete benefit involves allowing organizations that have invested heavily in Hyper-V infrastructure to manage containers with consistent tooling. We'll update this section online as details emerge in the coming months.

DevOps Paradigm

Containers promise to help bring developers and operations closer and enable the faster development lifecycle possibilities that are fueling much of the recent talk about a DevOps paradigm. In the channel, as Microsoft increasingly encourages partners to focus on developing their own IP, containers could offer a way to make delivery of vertical applications to multiple customers faster and less dependent on customers having specific OS versions installed. Across the product line, the implications span the roadmap from the Azure cloud to Windows Server 2016 to Hyper-V to Nano Server. The technology picture will come into sharper focus as Microsoft and Docker continue their partnership and as product milestones approach. But it's already clear from the outlines that containers are the next little big thing for the Microsoft ecosystem.