In-Depth

Why IoT Sucks (And Why That's Good for Partners)

As promising as it is, IoT has problems -- from security to reliability to management. And problems, of course, are good for Microsoft partners who can offer solutions.

- By Howard M. Cohen

- May 01, 2017

In their continuing search for new avenues for their businesses, many resellers, services providers and other Microsoft partners might skip right past the Internet of Things (IoT) for a variety of reasons, many of them based on serious misconceptions.

Some of the most common:

- It's just too new. It's still going to be years before there's any real opportunity in the IoT.

- It's just not clear where the opportunities are for companies like mine.

- There's no IoT and there's not ever going to be one. It's all marketing hype, just like "cloud."

- There's nothing to these "things." They don't need any kind of servicing. Plug 'n' play.

All Wrong

The great news is that all of these apprehensions miss the mark completely. Let's start by looking at the idea that the IoT is too new and it's going to be years before there's any "there" there.

The number of "things" connected to the Internet had already exceeded the number of people on earth by 2008, according to Cisco Systems Inc., so IoT has arguably been a global reality for nearly a decade. What's going to happen next is that hockey-stick moment when the sheer volume of things expands geometrically. Statista Inc. forecasts more than 50 billion things by 2020, up from 22.9 billion last year. This creates huge and growing IT partner opportunities.

While it's true that there have been more "things" than people on the Internet for quite some time, it's also true that most of these "things" are cheaply made, poorly resourced and highly fallible. Bottom line? These "things" suck.

Why Do These 'Things' Suck?

The things of IoT pose a variety of challenges. Security is the most visible, and its problems are broadly acknowledged. Until last fall, though, IoT security was a mostly philosophical discussion. Then came Mirai, and the lack of security for IoT devices became a global kerfuffle.

At its core, Mirai is malware that scans the Internet for vulnerable devices. It leveraged the fact that few users ever change the default passwords on webcams and other IoT devices, and that on many devices the passwords are hardcoded and can't be changed at all. Mirai tried a list of standard username/password combinations to take over thousands of IoT devices and herd them into a giant botnet reporting to command-and-control servers.
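Mirai's first step, trying known default logins, is exactly what a partner can check for defensively. The minimal Python sketch below flags devices in an inventory whose configured credentials match a list of factory defaults. The credential list, the inventory format and the function name are hypothetical illustrations, not Mirai's actual dictionary or any vendor's tool.

```python
# Hypothetical sample of factory-default username/password pairs.
# A real audit would rely on a maintained list, not this short illustration.
DEFAULT_CREDENTIALS = {
    ("admin", "admin"),
    ("root", "root"),
    ("admin", "password"),
    ("root", "12345"),
    ("user", "user"),
}

def find_default_credentials(inventory):
    """Return the IDs of devices whose login is a known default pair.

    `inventory` maps a device ID to a (username, password) tuple, as a
    partner might export it from an asset-management system (hypothetical format).
    """
    return sorted(
        device_id
        for device_id, creds in inventory.items()
        if creds in DEFAULT_CREDENTIALS
    )

inventory = {
    "lobby-cam-01": ("admin", "admin"),
    "dock-cam-02": ("admin", "V3ry-l0ng-unique-pass"),
    "hvac-ctrl-07": ("root", "12345"),
}
print(find_default_credentials(inventory))  # ['hvac-ctrl-07', 'lobby-cam-01']
```

A flagged device whose password is hardcoded can't be fixed with a password change; it has to be isolated behind a firewall or replaced.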

Each device is weak in terms of its individual compute capability, but their sheer numbers create the potential for distributed denial of service (DDoS) attacks of unprecedented scale.

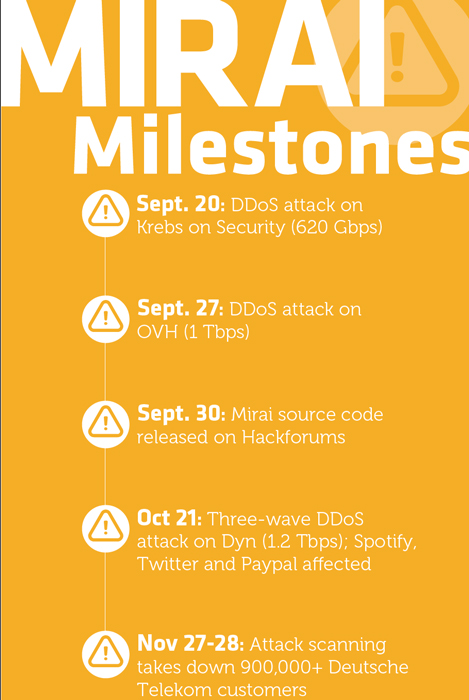

The first strike was a huge DDoS attack on Sept. 20 against Krebs on Security, the eponymous Web site of journalist Brian Krebs. Attack volume reached the jaw-dropping level of 620 Gbps.

The next high-profile hit came in late September, with French hosting company OVH sustaining DDoS attack volumes of 1 Tbps. A few days later, a user going by the screenname "Anna-senpai" posted the Mirai source code on Hackforums and the community went to work on the code.

IoT's real coming-of-age security moment, though, started at 7 a.m. (ET) on Friday, Oct. 21. That was when the first of three Mirai-based DDoS attacks hit Dyn, a major Domain Name System (DNS) provider. As IoT devices, particularly webcams sold by XiongMai Technologies, flooded the DNS provider with malicious requests, the attack disrupted major Web sites, including Spotify, Twitter and PayPal.

Given Dyn's aggressive mitigation efforts, aided by Internet infrastructure stakeholders, it's hard to know exactly how intense the Mirai attacks got, but some reports were as high as 1.2 Tbps, nearly twice the volume of the first public attack against Krebs on Security. In an after-action statement, Dyn Executive Vice President of Product Scott Hilton estimated that the attack involved up to 100,000 malicious endpoints, many of them part of a Mirai-based botnet. (Initial device count estimates were much higher due to storms of legitimate retry activity as servers attempted to refresh their caches due to attack interference.)
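Putting the reported figures side by side shows why sheer numbers matter. This back-of-the-envelope Python calculation uses only the estimates quoted above, and it assumes, for simplicity, that the peak volume was spread evenly across all 100,000 endpoints, which real botnet traffic is not.

```python
# Figures as reported above; all of them are estimates.
dyn_peak_gbps = 1200        # "as high as 1.2 Tbps"
krebs_peak_gbps = 620       # attack on Krebs on Security
dyn_endpoints = 100_000     # Dyn's "up to 100,000 malicious endpoints"

ratio = dyn_peak_gbps / krebs_peak_gbps
# Convert Gbps to Mbps, then divide evenly across endpoints (a simplification).
per_device_mbps = dyn_peak_gbps * 1000 / dyn_endpoints

print(f"Dyn peak vs. Krebs peak: {ratio:.2f}x")             # 1.94x
print(f"Average per endpoint: {per_device_mbps:.0f} Mbps")  # 12 Mbps
```

A modest stream from each device, multiplied across a large enough herd, is all it takes.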

The Dyn attack was not the last use of Mirai. In late November, another Mirai botnet attack on home routers in Germany knocked more than 900,000 customers off the Internet. Nor is the Mirai code likely to outlive its usefulness any time soon. Even if the specific vulnerabilities Mirai exploits are eventually closed off enough to make it nearly irrelevant, the exponential growth in IoT device usage all but guarantees that similar malware will keep emerging to exploit such a rich target.

In a post on its official blog, security giant Symantec Corp. quantified how heavy the IoT threat already is. "The average IoT device is scanned every two minutes. This means that a vulnerable device, such as one with a default password, could be compromised within minutes of going online."
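To see why "compromised within minutes" is no exaggeration, assume, as a modeling simplification that Symantec did not state, that scans arrive randomly as a Poisson process averaging one every two minutes. The chance that a device sees at least one scan within t minutes is then 1 - e^(-t/2), which this Python sketch computes:

```python
import math

MEAN_MINUTES_BETWEEN_SCANS = 2.0  # Symantec's "every two minutes" average

def prob_scanned_within(minutes, mean_interval=MEAN_MINUTES_BETWEEN_SCANS):
    """Probability of at least one scan within `minutes`, assuming scans
    arrive as a Poisson process with the given mean interval (an assumption)."""
    rate = 1.0 / mean_interval
    return 1.0 - math.exp(-rate * minutes)

for t in (1, 5, 10):
    print(f"{t:>2} min: {prob_scanned_within(t):.3f}")
```

Under that assumption, a new device has roughly a 92 percent chance of being scanned within five minutes and better than 99 percent within ten. A scan is not a compromise, of course; the device still has to be vulnerable.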

Even when they're not under attack, the everyday devices, including security cameras, cars, refrigerators, switches, thermostats and sensors of all kinds, have problems.

If Rodney Dangerfield sat on the Internet Engineering Task Force (IETF), he would tell you: "Things don't get no respect." Just look at what the IETF called its dedicated routing protocol for "Small Objects networks" when it approved it in 2011: the "IPv6 Routing Protocol for Low-Power and Lossy Networks," or RPL. Put plainly, these Internet-connected things suck.

JP Vasseur, Cisco fellow and co-chair of the IETF Working Group responsible for RPL standardization, offered a concise overview of the challenges in a blog post at the time of the RPL approval. "The industry has for some time been working to develop new IPv6 protocols designed specifically for constrained networking environments such as IP smart objects. IPv6 is the 128-bit Internet Protocol addressing system that is slowly replacing the original 32-bit IPv4 we're all accustomed to," Vasseur wrote. "These smart objects typically have to operate with very limited processing power, memory and under low energy conditions, and as a result, require a new generation of routing protocols to help them connect to the outside world. When compared to computers, laptops or even today's generation of smart phones, traditional IPv6 protocols tend to work less effectively or consume energy at too rapid a rate for these small, self-contained devices or sensors that are often powered by small batteries that are difficult to replace."

The challenge isn't limited to the new generation of sensor and control devices most closely associated with IoT. Those who have worked with Hadoop since its introduction in 2006 have enjoyed tremendous gains in data-handling speed, thanks in part to its ability to build storage clusters across thousands of commodity-priced storage devices. They have also learned that with so many devices involved, hardware failures are virtually unavoidable, so the protocols and software they use must handle those failures at the application level. That gives partners yet another way to differentiate their offerings: superior fault management. There are opportunities at every level of the stack for high-end developers to create protocol-level software that compensates for the high error rates of these low-cost hardware "things."
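The arithmetic behind unavoidable hardware failures is simple. Hadoop's HDFS stores three copies of each block by default, which is what lets software ride out individual device failures. In the Python sketch below, the 5,000-disk cluster, the 2 percent annual failure rate and the 0.1 percent per-window failure probability are illustrative assumptions, and treating failures as independent is a simplification.

```python
def expected_failures(num_devices, annual_failure_rate):
    """Expected number of devices that fail over one year."""
    return num_devices * annual_failure_rate

def prob_all_replicas_lost(replicas, failure_prob):
    """Chance that every copy of a single block fails within the same
    recovery window, assuming independent failures (a simplification)
    and no re-replication before the window closes."""
    return failure_prob ** replicas

# Illustrative numbers, not measurements.
disks, afr = 5_000, 0.02
per_year = expected_failures(disks, afr)
print(f"Expected disk failures per year: {per_year:.0f}")  # 100
print(f"Per week: {per_year / 52:.1f}")                    # 1.9

for r in (1, 2, 3):
    print(f"{r} copies: {prob_all_replicas_lost(r, 0.001):.0e}")
```

Under these assumptions, disk failures become a routine weekly event, while losing all three copies of a given block at once becomes vanishingly rare, which is why the fault handling belongs in software.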

Clearly, there's also a significant opportunity available to channel partners in simply monitoring and maintaining the often low-quality and problem-prone devices themselves.
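The most basic form of that monitoring is noticing when a device goes quiet. This Python sketch assumes each device sends a periodic heartbeat and that the partner records the time of the last one; the device names and the 15-minute threshold are hypothetical.

```python
from datetime import datetime, timedelta

def stale_devices(last_seen, now, max_silence=timedelta(minutes=15)):
    """Return IDs of devices that have not reported within `max_silence`.

    `last_seen` maps a device ID to the datetime of its most recent
    heartbeat. The 15-minute default is an arbitrary, illustrative choice.
    """
    return sorted(
        device for device, seen in last_seen.items() if now - seen > max_silence
    )

now = datetime(2017, 5, 1, 12, 0)
last_seen = {
    "thermostat-3f": now - timedelta(minutes=2),
    "door-sensor-12": now - timedelta(hours=3),
    "pump-meter-04": now - timedelta(minutes=20),
}
print(stale_devices(last_seen, now))  # ['door-sensor-12', 'pump-meter-04']
```

In practice the threshold would be set per device class, since a battery-powered sensor may report far less often than a mains-powered controller.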

Opportunities Created by IPv6

It may seem tangential, but the explosion of IoT is adding pressure for a huge technological change that offers a related project opportunity for Microsoft partners. We've run out of IPv4 addresses to assign to things. In fact, we ran out long ago.

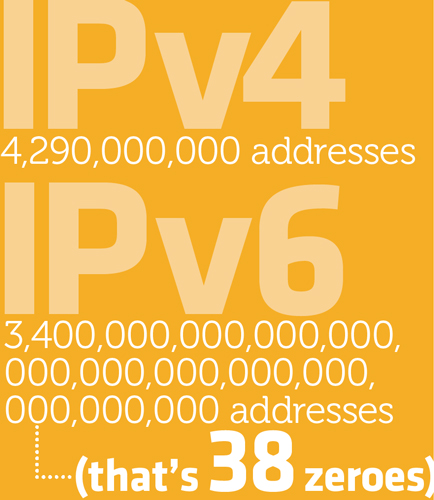

Every device on the Internet, and every device on private networks that uses the TCP/IP protocol, must have an IP address. In IPv4, that address is written as four numbers between 0 and 255, separated by dots. Each number takes 8 bits, so the scheme totals 32 bits, and the number of possible combinations works out to 2 to the 32nd power, or about 4.3 billion. On Jan. 31, 2011, the Internet Assigned Numbers Authority (IANA) distributed the last blocks of available addresses to the regional Internet registries around the world, which each assigned them locally, finishing in September 2012.
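The 4.3 billion figure falls straight out of the address format: four 8-bit numbers make 32 bits, and a dotted-quad address is just a readable way to write one 32-bit integer. The sketch below checks that arithmetic against Python's standard `ipaddress` module; the helper function is illustrative, and 192.0.2.10 comes from the range reserved for documentation.

```python
import ipaddress

def dotted_quad_to_int(text):
    """Combine four 8-bit octets into one 32-bit integer."""
    octets = [int(part) for part in text.split(".")]
    if len(octets) != 4 or not all(0 <= o <= 255 for o in octets):
        raise ValueError(f"not a dotted-quad IPv4 address: {text!r}")
    a, b, c, d = octets
    return (a << 24) | (b << 16) | (c << 8) | d

total_ipv4 = 2 ** 32
print(f"{total_ipv4:,}")  # 4,294,967,296

addr = "192.0.2.10"
print(dotted_quad_to_int(addr))                                      # 3221225994
print(dotted_quad_to_int(addr) == int(ipaddress.IPv4Address(addr)))  # True
```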

As far back as 1992, the IETF recognized that 4.3 billion addresses wouldn't accommodate the growth of the Internet forever, and issued a call for papers on a replacement addressing scheme. The result, IPv6, uses a 128-bit structure as opposed to IPv4's 32-bit. The available number of addresses is about 340 undecillion, a number so large as to be difficult to comprehend, but here's the comparison in approximate numbers:

- IPv4: 2 to the 32nd power, or about 4.3 billion (4.3 × 10^9) addresses
- IPv6: 2 to the 128th power, or about 340 undecillion (3.4 × 10^38) addresses

To put it in some perspective, a single standard IPv6 subnet (a /64) holds 2 to the 64th power addresses, exactly the square of the entire IPv4 address space. In other words, IPv6 provides more than enough addresses for the foreseeable future.
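The subnet-versus-IPv4 comparison can be checked directly. A standard IPv6 subnet is a /64, leaving 64 bits for host addresses; the sketch below uses Python's standard `ipaddress` module with the 2001:db8:: documentation prefix as an example network.

```python
import ipaddress

ipv4_space = 2 ** 32
ipv6_space = 2 ** 128

# A /64 leaves 64 bits for host addresses within the subnet.
subnet = ipaddress.IPv6Network("2001:db8::/64")

print(subnet.num_addresses == ipv4_space ** 2)  # True
print(f"{ipv6_space:.2e}")                      # 3.40e+38
print(ipv6_space // subnet.num_addresses)       # 18446744073709551616, i.e. 2**64 possible /64 subnets
```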

The Transition to IPv6

For many, the transition to IPv6 began years ago. Most analysts predicted that it would take years to complete, with hybrid strategies buying time by letting users run their networks on both address structures at once. Because the two address structures are so different, and IPv6 uses a different packet format, IPv4 devices and IPv6 devices cannot interoperate without some form of gateway. The most popular coexistence strategies include tunneling, in which IPv6 packets are encapsulated inside IPv4 packets at the cost of additional overhead; dual-stack, in which devices and network equipment run IPv4 and IPv6 side by side; and translation between the two protocols, including cloud-based translation services.
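Tunneling's overhead is easy to see at the packet level. In the common 6in4 method, an IPv6 packet travels as the payload of an ordinary IPv4 packet whose protocol field is set to 41. The Python sketch below only builds the bytes; it sends nothing, and real tunnels are configured in the operating system or router rather than assembled by hand. The addresses come from the IPv4 documentation ranges, and the 40 zero bytes merely stand in for a real IPv6 packet.

```python
import ipaddress
import struct

IPPROTO_IPV6 = 41  # IP protocol number for IPv6 carried inside IPv4 (6in4)

def ipv4_checksum(header):
    """Internet checksum: one's-complement sum of 16-bit big-endian words."""
    total = 0
    for i in range(0, len(header), 2):
        total += (header[i] << 8) | header[i + 1]
    while total >> 16:  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF

def encapsulate_6in4(ipv6_packet, src, dst, ttl=64):
    """Prepend a minimal 20-byte IPv4 header (no options) to an IPv6 packet."""
    header = struct.pack(
        "!BBHHHBBH4s4s",
        (4 << 4) | 5,           # version 4, header length of 5 x 32-bit words
        0,                      # DSCP/ECN
        20 + len(ipv6_packet),  # total length: outer header plus payload
        0,                      # identification
        0,                      # flags and fragment offset
        ttl,
        IPPROTO_IPV6,           # tells the receiver the payload is IPv6
        0,                      # checksum placeholder, filled in below
        ipaddress.IPv4Address(src).packed,
        ipaddress.IPv4Address(dst).packed,
    )
    checksum = struct.pack("!H", ipv4_checksum(header))
    return header[:10] + checksum + header[12:] + ipv6_packet

inner = bytes(40)  # placeholder for an IPv6 packet (its fixed header alone is 40 bytes)
outer = encapsulate_6in4(inner, "192.0.2.1", "198.51.100.7")
print(len(outer) - len(inner))  # 20 bytes of tunnel overhead per packet
print(outer[9])                 # 41
```

Those 20 extra bytes on every packet are the tunneling overhead; they also shrink the room left for IPv6 payload within a link's maximum packet size.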

The IoT, in which everything talks to everything using IP, requires many more IP addresses. Even now you can lock, unlock and start your car using your smartphone. Your tablet or your handheld can be used to control your home theater system and many appliances. Manufacturing shop floor measurements and controls are accomplished using tablets, as well. Cars have their own built-in Wi-Fi hotspots. All of this, coupled with the incredible popularity of bring your own device (BYOD) initiatives, drives more people to own more IP devices. It's not unusual for someone to own a PC, a tablet and a smartphone. Multiply that by the number of users in the world.

Then there's virtualization, which has allowed us to pack dozens of virtual machine (VM) server instances into a single server device. Some manufacturers are now introducing servers that can achieve VM densities in the hundreds of server instances. Each of those virtual servers requires an IP address.

Bottom line: The need for an incredible number of new IP addresses grows exponentially every day. The transition to IPv6 must be a top priority for anyone and everyone who uses the Internet. Yet, analysts tell us that the overwhelming majority of organizations haven't even begun planning yet. We're crawling when we need to speed past walking and running and start to fly. Note that every IPv6 transition requires two major projects with plenty of replacement hardware and months of services. The first will create a hybrid between IPv4 and IPv6 so everyone can continue using the entire Internet. Then, someday, we'll all need to shift to pure IPv6.

It should go without saying that securing IPv6 traffic will be far more complex than securing IPv4 traffic, but let's put it in IoT perspective:

It's a summer day in Phoenix, Ariz. The temperature has hit 117 degrees. An attacker somewhere in the world has just breached the Industrial Control System (ICS) for one of the taller buildings in the city, launching a DDoS attack that brings the industry-standard PC running the ICS to its knees.

No data is lost, corrupted or stolen, and no identities or identifying data are compromised. But the air conditioning has been shut down.

Within a very short time the building empties out as employees rush to escape the stifling heat. Think this sounds like science fiction? It's already happened, and will happen again. And again.

New Architecture for a New Age

Microsoft partners all remember when the world changed and Microsoft embraced open source after competing with it for decades. IoT was certainly a major motivator for this change.

Back when networks were new, manufacturers of storage products saw great logic in embedding the intelligence that ran their sophisticated storage systems directly into the products themselves. As a result, we still think of storage-area network (SAN) and network-attached storage (NAS) appliances as "standard."

As cloud networks have grown in prominence, this has all changed. With users across a network edge that spans the global Internet, and applications becoming mobile as microservices housed and transported easily in containers crossing software-defined networks, it makes little sense for all of them to reach across the entire network to a storage appliance whenever they need to read or write data. Doing so negates much of the advantage of cloud computing.

In response, manufacturers and developers have moved storage intelligence back out of the appliances and onto standard architecture servers where storage administrators and others enjoy more flexibility and more power over what they do with it. One great example of what they've done in this new environment is Ceph, an open source community project that enables the construction of storage clusters.

In a storage cluster, data is distributed to inexpensive commodity storage hardware devices located throughout the cloud network. Because all the data is replicated, device failure can easily be tolerated with no loss of data and minimal loss of throughput. Now the data can travel with the containerized microservice apps, giving users the full benefit available from their cloud.
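A cluster like this needs a rule for deciding which devices hold each object's copies. Ceph itself uses its CRUSH algorithm; the Python sketch below swaps in simpler rendezvous (highest-random-weight) hashing to illustrate the same idea: any client can compute where data lives from the object name alone, without a central lookup. The device and object names are hypothetical.

```python
import hashlib

def _score(key, device):
    """Deterministic pseudo-random weight for a (key, device) pair."""
    digest = hashlib.sha256(f"{key}:{device}".encode()).digest()
    return int.from_bytes(digest[:8], "big")

def place_replicas(key, devices, replicas=3):
    """Choose `replicas` distinct devices for an object using rendezvous
    hashing: rank every device by its score for the key, keep the top ones."""
    if replicas > len(devices):
        raise ValueError("not enough devices for the requested replica count")
    ranked = sorted(devices, key=lambda d: _score(key, d), reverse=True)
    return ranked[:replicas]

devices = [f"osd-{i}" for i in range(8)]  # hypothetical device names
key = "sensor-log-2017-05-01"             # hypothetical object name
copies = place_replicas(key, devices)
print("replicas on:", copies)

# Simulate losing the device holding the first copy. Recomputing over the
# survivors keeps the other two copies where they are and picks one new
# device to receive a fresh replica.
survivors = [d for d in devices if d != copies[0]]
new_copies = place_replicas(key, survivors)
print("after failure:", new_copies)
```

The useful property is that a failure moves only the data that lived on the failed device, which keeps recovery traffic small.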

Managing the IoT

Clearly there are opportunities for ISVs and vertical specialists in seeding IoT sensors throughout manufacturing floors and in countless other advanced solutions. But the problems with IoT mean there will be opportunities galore in IoT for less-specialized partners.

Though the term "managed services provider" has been rendered almost meaningless by the wide variety of channel players who leverage it, the need for monitoring and management reaches every facet of information technology. To name a few, there are performance management, outage management, Service Level Agreement (SLA) management and Quality of Service (QoS) management.
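SLA management starts with translating an availability percentage into minutes a customer will actually notice. This Python sketch assumes a 30-day measurement period for illustration; real contracts define their own windows and exclusions.

```python
def allowed_downtime_minutes(availability_pct, days=30):
    """Downtime permitted by an availability target over `days` days."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - availability_pct / 100)

for target in (99.0, 99.9, 99.99):
    minutes = allowed_downtime_minutes(target)
    print(f"{target}% -> {minutes:.1f} minutes per 30 days")
```

A 99.9 percent target leaves about 43 minutes of downtime a month; across thousands of field devices, meeting it depends on the kind of monitoring and maintenance described here.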

Keep in mind that IoT includes systems like ICS, which require environmental sensors and controls at a highly granular level. Tomorrow's IT services provider will be called upon to manage many more physical attributes, such as maintenance and replacement of these devices and environmental testing to ensure proper performance. Similarly, new communication services based on microphones and speakers installed into bus stops, train stations and other venues will also require testing, maintenance and periodic servicing.

There will be billions of devices to manage, along with more sophisticated services, larger namespaces, more intricate security and new physical adjacencies. The challenges will be many and the solutions lucrative.

In any language, that spells opportunity.