Report Finds Major Lags in Some Big Data Cloud Migrations from AWS S3

Test results published by cloud storage provider Nasuni this week suggest it's faster to move terabytes of data to Amazon Web Services' S3 than to Microsoft's Windows Azure or Rackspace's Cloud Files.

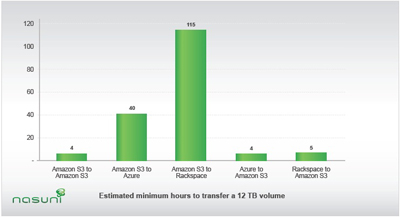

Nasuni found that moving a 12 TB volume of data consisting of approximately 22 million files to Amazon S3 (or between S3 storage buckets) took only four hours from Windows Azure and five hours from Rackspace Cloud Files. Going the other way, though, took considerably longer: 40 hours from Amazon S3 to Windows Azure and just under a week from Amazon S3 to Rackspace Cloud Files.

Estimated minimum hours to transfer a 12 TB volume. (Source: Nasuni)
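To put the reported durations in perspective, the average throughput each one implies can be worked out from the volume size and the elapsed time. The sketch below is only illustrative arithmetic, not Nasuni's methodology: the function name `implied_throughput_mbps` is hypothetical, and it assumes decimal units (1 TB = 10^12 bytes, 1 Mbps = 10^6 bits per second). The article does not say whether its quoted Mbps figures are per machine or aggregate, so these averages are not directly comparable with them.

```python
def implied_throughput_mbps(volume_tb: float, hours: float) -> float:
    """Average megabits per second needed to move volume_tb in the given hours.

    Assumption: decimal units (1 TB = 10**12 bytes, 1 Mbps = 10**6 bits/s).
    """
    if hours <= 0:
        raise ValueError("hours must be positive")
    total_bits = volume_tb * 10**12 * 8
    return total_bits / (hours * 3600) / 10**6


# Durations as reported in the article for a 12 TB volume.
reported_hours = {
    "Windows Azure -> Amazon S3": 4,
    "Rackspace Cloud Files -> Amazon S3": 5,
    "Amazon S3 -> Windows Azure": 40,
}

for route, hours in reported_hours.items():
    rate = implied_throughput_mbps(12, hours)
    print(f"{route}: about {rate:,.0f} Mbps on average")
```

Under these assumptions, a four-hour transfer of 12 TB corresponds to an average of roughly 6.7 Gbps across all machines involved, while a 40-hour transfer corresponds to roughly one tenth of that.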

Officials at Nasuni found the results surprising and wondered whether Microsoft and Rackspace throttle bandwidth when data is written to their respective services. In the case of Windows Azure, Nasuni was able to reach peak bandwidth rates of 25 Mbps, which was deemed good, but throughput dropped off significantly during peak hours, said Nasuni CEO Andres Rodriguez.

"Our suspicion is that Microsoft is throttling the maximum performance to a common data set, to make sure that the quality of service is maintained to the rest of the customers, who are sharing their piece of infrastructure," Rodriguez said. "That's a good thing for everyone. It's not a great thing for those trying to get a lot of performance out of their storage tier."

Rodriguez acknowledged that this was his own speculation and that Nasuni had not conferred with Microsoft or Rackspace about the issue. A Microsoft spokeswoman denied his theory. "Microsoft does not throttle bandwidth, ever," she said. Although Microsoft looked at the report, the company declined further comment, noting it doesn't have "deep insight" into Nasuni's testing methods.

Ironically, Windows Azure performed well in a December test by Nasuni, in which it ranked fastest at writing large files. Amazon, Microsoft and Rackspace were the top performers in that December shootout, which is why they were singled out for the current test. (Also, Amazon is currently Nasuni's preferred storage provider.)

Conversely, Nasuni was able to move files from Windows Azure to Amazon S3 much faster: Amazon received data at more than 270 Mbps, and the 12 TB of data moved in approximately four hours. "This test demonstrated that Amazon S3 had tremendous write performance and bandwidth into S3, and also that Microsoft Azure could provide the data fast enough to support the movement," the report noted.

Nasuni acknowledged that writes are typically slower than reads in most storage systems and that external bandwidth is far more limited than internal bandwidth. Nasuni also noted in the report that the limit it hit could just as easily have been Amazon's write limit as Microsoft's read limit, either one the result of the provider's bandwidth capacity and infrastructure.

Engineers at Nasuni also noted they were surprised at the limits of Amazon's EC2 in regard to how many machines a customer can run by default -- only 20 machines (for more you have to contact Amazon). To bypass that limit, Nasuni combined machines from multiple accounts.

For their part, Rackspace officials were puzzled as to why it took so much time to move data from Amazon to the Rackspace Cloud Files service. "The results were surprising to us but we are making efforts to understand how the test was run and understand where some of that limitation might have been coming from," said Scott Gibson, the company's director of product for big data. Unlike the Microsoft spokeswoman, Gibson acknowledged there are cases when Rackspace does put some limits on requests. But he said the company completed a significant hardware upgrade in mid-February to alleviate those situations. Nasuni conducted the tests between Jan. 31 and Feb. 8.

Gibson was skeptical that this was a burning problem among Rackspace customers. "I wouldn't say it's horribly common," he said. "We do have [some] customers who move large amounts of data between datacenters. If that level of performance was the norm, we would probably hear about it loud and clear."

Rodriguez insisted he had no axe to grind with either provider. In fact, he suggested he'd like to see both companies, and others, make it possible to move large amounts of data between providers, which would give Nasuni the most flexibility to offer higher levels of price performance and redundancy. He emphasized that the purpose of the test was to gauge how long it would take to move large blocks of data among the providers.

When conducting the tests, Nasuni moved data between the providers using machines that communicated over encrypted HTTPS connections. The data was encrypted at the source, and the company did not store any of it on disk in transit. Nasuni scaled from one machine to 40 and saw higher error rates from the providers as the loads increased, though ultimately the transfers were completed after a number of retries, the report noted.
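The report says only that transfers eventually completed after a number of retries; it does not describe the retry strategy. As an illustration of one common approach, the sketch below retries a failed operation with exponential backoff and random jitter. Everything in it is an assumption made for the example: the function name `transfer_with_retries`, its parameters, and the choice to treat `ConnectionError` and `TimeoutError` as retryable are hypothetical, not taken from Nasuni's tooling.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def transfer_with_retries(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run operation, retrying transient failures with exponential backoff.

    Hypothetical helper: the retryable error types and the delays are
    assumptions, not values from the report.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except (ConnectionError, TimeoutError):
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            # Double the delay ceiling on each failure, capped at max_delay,
            # and pick a random wait below it so workers do not retry in step.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")


# Simulated copy that fails twice before succeeding.
attempts = {"count": 0}


def flaky_copy() -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("simulated provider error")
    return "copied"


# A no-op sleep keeps the demonstration instantaneous.
result = transfer_with_retries(flaky_copy, sleep=lambda _seconds: None)
print(result, "after", attempts["count"], "attempts")
```

Spreading retries out this way matters more as the number of machines grows, since many workers retrying at the same instant can add to the load that caused the errors in the first place.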

It would be hard to conclude from one test that anyone wanting to move large blocks of data from provider to provider would experience similar results, but the test does point to the likelihood that swapping between providers is not going to be a piece of cake.

Posted by Jeffrey Schwartz on March 22, 2012